This guide explores how to code a MySQL database project, opening up the world of structured data management. It offers a detailed look at crafting robust and efficient database solutions with MySQL, a widely adopted open-source relational database management system. From initial project planning to advanced optimization techniques, we will walk through the essential steps to build, secure, and deploy your MySQL database projects.

We will delve into the core aspects of database design, from creating Entity-Relationship Diagrams (ERDs) to selecting appropriate data types and implementing normalization. You’ll learn how to set up your MySQL environment, create schemas, and master CRUD (Create, Read, Update, Delete) operations. Furthermore, we will cover advanced SQL techniques, performance optimization strategies, security considerations, and deployment procedures. Real-world project examples and case studies will provide practical insights, equipping you with the knowledge and skills to excel in MySQL database development.

Project Planning & Requirements Gathering

The initial phase of any successful MySQL database project is project planning and requirements gathering. This stage is critical because it lays the foundation for the entire project, influencing its scope, features, and ultimately, its success. Careful planning and thorough requirements gathering minimize risks, ensure alignment with user needs, and provide a clear roadmap for development.

Essential Steps in Project Planning

Project planning establishes the groundwork for the project. These steps are vital for ensuring a well-defined and manageable project.

- Define Project Goals and Objectives: Clearly articulate the overall purpose of the project and the specific, measurable, achievable, relevant, and time-bound (SMART) objectives it aims to achieve. This includes identifying the problem the database will solve or the opportunity it will capitalize on. For example, if building an e-commerce database, the goal might be to improve order processing efficiency and the objective could be to reduce order processing time by 20% within six months.

- Identify Stakeholders: Determine all individuals or groups who have an interest in the project’s outcome. This includes end-users, project managers, developers, database administrators, and business owners. Understanding their needs and expectations is crucial.

- Determine Project Scope: Define the boundaries of the project, specifying what will be included and excluded. This prevents scope creep and ensures the project remains focused. For example, the initial scope of an e-commerce project might include product catalog management, order processing, and customer accounts, but exclude advanced features like real-time inventory updates in the first phase.

- Create a Project Timeline and Schedule: Develop a realistic timeline with key milestones and deadlines. This involves breaking down the project into smaller, manageable tasks and assigning resources. Tools like Gantt charts can be helpful for visualizing the schedule.

- Estimate Resources and Budget: Assess the resources required, including personnel, hardware, software, and training. Create a budget that accounts for all these costs. The budget should include contingencies for unexpected expenses.

- Risk Assessment: Identify potential risks that could impact the project, such as technical challenges, resource constraints, or changes in requirements. Develop mitigation strategies for each risk. For instance, a risk might be a lack of experienced MySQL developers; the mitigation strategy could involve training existing staff or hiring consultants.

Defining Project Scope and Identifying Key Features

Defining the project scope is essential for controlling project boundaries and preventing scope creep. Identifying key features ensures the application meets the core needs of its users.

Project scope encompasses the features and functionalities that will be included in the database application. It defines the boundaries of the project, preventing the addition of unnecessary features that could lead to delays and budget overruns.

To define the scope:

- Understand User Needs: Conduct thorough user interviews and surveys to understand the specific needs and requirements of the end-users.

- Prioritize Features: List all potential features and prioritize them based on their importance and impact on the project’s goals. Use techniques like MoSCoW (Must have, Should have, Could have, Won’t have) to categorize features.

- Document Scope: Create a clear and concise document outlining the project’s scope, including a list of included features and any excluded features.

- Get Stakeholder Approval: Obtain approval from all stakeholders on the defined scope to ensure everyone is aligned.
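As a rough illustration of MoSCoW prioritization, the sketch below drops Won't-have items and orders the rest so that Must-have features come first. The `MoSCoW` enum, the `Feature` type, and the "Wish lists" feature are hypothetical. The other feature names echo the e-commerce scope example earlier; this is not the interface of any particular planning tool.

```python
from dataclasses import dataclass
from enum import IntEnum


class MoSCoW(IntEnum):
    """Lower value means higher priority."""
    MUST = 0
    SHOULD = 1
    COULD = 2
    WONT = 3


@dataclass
class Feature:
    name: str
    priority: MoSCoW


def release_scope(backlog: list[Feature]) -> list[str]:
    """Return in-scope feature names, highest priority first; Won't-have items are excluded."""
    in_scope = [f for f in backlog if f.priority != MoSCoW.WONT]
    # sorted() is stable, so features with equal priority keep their backlog order
    return [f.name for f in sorted(in_scope, key=lambda f: f.priority)]


backlog = [
    Feature("Customer accounts", MoSCoW.MUST),
    Feature("Real-time inventory updates", MoSCoW.WONT),  # excluded from phase one
    Feature("Product catalog management", MoSCoW.MUST),
    Feature("Wish lists", MoSCoW.COULD),  # hypothetical extra feature
    Feature("Order processing", MoSCoW.MUST),
]
print(release_scope(backlog))
```

Writing the categories down this explicitly also gives stakeholders a concrete list to approve.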

Identifying key features:

- Analyze User Stories: Translate user needs into user stories, which describe the functionality from the user’s perspective. For example, “As a customer, I want to be able to search for products by keyword.”

- Create a Feature List: Based on the user stories, create a detailed list of features. This list should include descriptions of each feature and its purpose.

- Determine Data Models: Identify the data entities and their relationships that will be necessary to support the features. For example, in an e-commerce database, this would involve defining tables for products, customers, orders, and categories.

- Specify Functionality: Describe the specific functionalities of each feature, including input, processing, and output.

Examples of Applications Suitable for MySQL Database Projects

MySQL is a versatile database system suitable for a wide range of applications. Here are some examples:

- E-commerce Platforms: Managing product catalogs, customer accounts, orders, and payment processing. Examples include online stores, marketplaces, and subscription services.

- Content Management Systems (CMS): Storing and managing website content, including articles, images, and videos. Examples include WordPress, Joomla, and Drupal.

- Customer Relationship Management (CRM) Systems: Tracking customer interactions, managing sales leads, and providing customer support. Examples include open-source CRMs such as SuiteCRM.

- Blog and Forum Applications: Storing and managing user-generated content, such as blog posts, comments, and forum discussions. Examples include phpBB and MyBB.

- Inventory Management Systems: Tracking stock levels, managing orders, and optimizing warehouse operations. Examples include small business inventory software.

- Social Media Platforms: Managing user profiles, posts, connections, and notifications. Facebook, for example, has run MySQL at very large scale.

- Learning Management Systems (LMS): Managing course content, student enrollment, and assessment data. Moodle, for example, supports MySQL as its database.

Process for Gathering and Documenting User Requirements

Gathering and documenting user requirements is a critical process for ensuring the database application meets user needs. This process must be conducted with clarity and completeness.

- Identify User Groups: Identify the different user groups who will interact with the database application. This will help tailor the requirements gathering process to each group’s specific needs.

- Conduct User Interviews: Conduct interviews with representatives from each user group to understand their needs, expectations, and pain points. Ask open-ended questions to encourage detailed responses.

- Create Surveys and Questionnaires: Distribute surveys and questionnaires to a wider audience to gather additional input and validate findings from the interviews. Use a mix of closed-ended and open-ended questions.

- Analyze Existing Documentation: Review any existing documentation, such as business process documents, user manuals, and system specifications, to understand current workflows and data requirements.

- Observe User Behavior: Observe users performing their tasks to gain insights into their actual behavior and identify any usability issues.

- Document Requirements: Document all gathered requirements in a clear and concise format. Use user stories, use cases, or other appropriate methods to describe the functionality.

- Prioritize Requirements: Prioritize requirements based on their importance and impact on the project’s goals. This helps to manage scope and ensure that the most critical features are implemented first.

- Validate Requirements: Validate the documented requirements with the users to ensure accuracy and completeness. This involves reviewing the requirements with the users and getting their feedback.

- Obtain Approval: Obtain approval from all stakeholders on the documented requirements to ensure everyone is aligned.

Potential Challenges and Mitigation Methods

Several challenges can arise during project planning and requirements gathering. Proactive mitigation strategies are necessary to minimize their impact.

- Scope Creep: The tendency for the project scope to expand over time, leading to delays and budget overruns.
  - Mitigation: Define a clear project scope at the outset, with a detailed list of included and excluded features. Establish a change management process to evaluate and approve any scope changes.

- Incomplete or Ambiguous Requirements: Requirements that are not clearly defined or are missing essential details.
  - Mitigation: Conduct thorough user interviews and surveys. Use clear and concise language in requirements documentation. Validate requirements with users.

- Changing Requirements: User needs and priorities may change during the project lifecycle.
  - Mitigation: Establish a change management process to evaluate and approve any changes. Prioritize requirements to accommodate changes without disrupting the project’s core functionality.

- Lack of Stakeholder Involvement: Insufficient participation from stakeholders can lead to misunderstandings and unmet expectations.
  - Mitigation: Involve stakeholders throughout the project lifecycle. Regularly communicate project progress and solicit feedback. Conduct regular meetings and workshops.

- Technical Complexity: The project may involve complex technical challenges.
  - Mitigation: Conduct a thorough technical assessment. Consult with experienced developers and database administrators. Use prototyping to test complex features.

- Resource Constraints: Insufficient resources, such as budget, personnel, or time, can hinder project progress.
  - Mitigation: Create a realistic budget and schedule. Secure necessary resources early in the project. Prioritize tasks and features to manage resource limitations.

Setting up the MySQL Environment

Setting up the MySQL environment is a critical first step in any database project. This involves installing the MySQL server, configuring it for your specific needs, and setting up the necessary user accounts and permissions. Proper setup ensures a secure, efficient, and manageable database environment, laying the foundation for your project’s success. This section will guide you through the process of installing and configuring MySQL, creating databases and users, and using MySQL Workbench for administration.

We will also explore how to connect to your MySQL database from various programming languages.

Installing and Configuring MySQL Server

Installing and configuring the MySQL server involves several platform-specific steps. These steps vary depending on your operating system, but the general principles remain the same.

For Windows:

- Download the MySQL installer from the official MySQL website. Choose the installer appropriate for your system (e.g., x86, x64).

- Run the installer. During the installation process, select the “Custom” installation type to have more control over the components installed.

- Choose the MySQL Server, MySQL Workbench, and any other desired components.

- Configure the server. This includes choosing the server configuration type (Development Computer, Server Computer, Dedicated Computer), setting the root password, and creating a user account.

- Start the MySQL server service. The installer typically provides an option to start the service automatically.

- Verify the installation by connecting to the MySQL server using MySQL Workbench or the command-line client.

For macOS:

- Download the MySQL Community Server DMG file from the official MySQL website.

- Open the DMG file and run the installer package.

- Follow the on-screen instructions. The installation process will guide you through the configuration.

- Set the root password during the configuration process.

- Start the MySQL server. The installer may offer an option to start the server automatically.

- Verify the installation by connecting to the MySQL server using MySQL Workbench or the command-line client.

For Linux (Debian/Ubuntu):

- Update the package list:

sudo apt update

- Install the MySQL server:

sudo apt install mysql-server

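- Depending on the MySQL version and Ubuntu release, you may not be prompted for a root password. On recent Ubuntu versions the MySQL root account authenticates through the auth_socket plugin, which is why the commands below are run with sudo.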

- Secure the MySQL installation:

sudo mysql_secure_installation

This script will guide you through several security-related options, such as setting a strong password for the root user, removing anonymous users, disallowing remote root login, and removing the test database.

- Verify the installation by connecting to the MySQL server using the command-line client:

sudo mysql -u root -p

For Linux (CentOS/RHEL):

- Update the installed packages:

sudo yum update

- Install the MySQL server:

sudo yum install mysql-server

- Start the MySQL service:

sudo systemctl start mysqld

- Enable the MySQL service to start on boot:

sudo systemctl enable mysqld

- Set the root password:

sudo mysqladmin -u root password 'new_password'

- Secure the MySQL installation (similar to Debian/Ubuntu):

mysql_secure_installation

- Verify the installation by connecting to the MySQL server using the command-line client:

mysql -u root -p

Creating Databases and User Accounts

Creating databases and user accounts with appropriate privileges is fundamental for organizing your data and controlling access. This involves using SQL commands to create the database, create users, and grant them the necessary permissions.

To create a database, use the following SQL command:

CREATE DATABASE your_database_name;

To create a user account and grant privileges, use the following SQL commands:

- Create a user:

CREATE USER 'your_username'@'localhost' IDENTIFIED BY 'your_password';

Replace `your_username` with the desired username, `localhost` with the hostname or IP address from which the user will connect (e.g., `'%'` for any host), and `your_password` with a strong password.

- Grant privileges:

GRANT ALL PRIVILEGES ON your_database_name.* TO 'your_username'@'localhost';

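This grants all privileges (SELECT, INSERT, UPDATE, DELETE, and more) on all tables within the specified database to the user. Replace `your_database_name` with the name of the database you created. Changes made with `CREATE USER` and `GRANT` take effect immediately. `FLUSH PRIVILEGES;` is only needed if you modify the grant tables directly with statements such as `INSERT` or `UPDATE`.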

- Granting specific privileges: Instead of `ALL PRIVILEGES`, you can grant specific privileges for better security, such as `SELECT`, `INSERT`, `UPDATE`, and `DELETE`.
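To make the least-privilege advice concrete, here is a small Python helper that assembles a `GRANT` statement for an explicit subset of data-manipulation privileges. The function name and the allow-list patterns are illustrative assumptions, not MySQL's full naming rules. Names are checked against a conservative pattern because a database name is an identifier and cannot be supplied as a bound query parameter.

```python
import re

# Conservative allow-lists for this sketch (assumptions, stricter than MySQL itself)
_DB_NAME = re.compile(r"^[A-Za-z0-9_]{1,64}$")
_USER_NAME = re.compile(r"^[A-Za-z0-9_]{1,32}$")
_HOST = re.compile(r"^[A-Za-z0-9_.%-]{1,255}$")
ALLOWED_PRIVILEGES = {"SELECT", "INSERT", "UPDATE", "DELETE"}


def build_grant(database: str, user: str, host: str, privileges: list[str]) -> str:
    """Build a GRANT limited to DML privileges on every table of one database."""
    if not _DB_NAME.match(database) or not _USER_NAME.match(user):
        raise ValueError("database and user names must be letters, digits, or underscores")
    if not _HOST.match(host):
        raise ValueError("unexpected characters in host")
    # Upper-case and de-duplicate while keeping the caller's order
    privs = list(dict.fromkeys(p.upper() for p in privileges))
    if not privs or set(privs) - ALLOWED_PRIVILEGES:
        raise ValueError(f"privileges must be a non-empty subset of {sorted(ALLOWED_PRIVILEGES)}")
    # Backticks quote the database identifier; single quotes wrap the account parts
    return f"GRANT {', '.join(privs)} ON `{database}`.* TO '{user}'@'{host}';"


print(build_grant("shop_db", "shop_app", "localhost", ["select", "insert"]))
# GRANT SELECT, INSERT ON `shop_db`.* TO 'shop_app'@'localhost';
```

An application account that only reads and writes rows rarely needs more than these four privileges. Schema changes can then be left to a separate administrative account.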

Using MySQL Workbench for Database Administration

MySQL Workbench is a graphical user interface (GUI) tool that simplifies database administration tasks. It provides a visual environment for managing databases, creating and modifying tables, running queries, and monitoring server performance.

Common tasks in MySQL Workbench:

- Connecting to the database server: You can connect to your MySQL server by specifying the hostname, port, username, and password.

- Creating and managing databases: You can create, delete, and modify databases using the schema editor.

- Creating and managing tables: You can visually design tables, define columns, set data types, and add indexes. The table editor provides an intuitive interface for defining the structure of your tables.

- Running SQL queries: You can execute SQL queries in the query editor. The query editor also provides features like syntax highlighting and auto-completion.

- Monitoring server performance: You can monitor server performance metrics, such as CPU usage, memory usage, and connection statistics. This helps you identify potential performance bottlenecks.

- Importing and exporting data: You can import data from various formats (CSV, SQL, etc.) and export data to different formats.

Example: Creating a table in MySQL Workbench. You can visually create a table by right-clicking on the database schema and selecting “Create Table”. A visual editor will appear, allowing you to add columns, define data types, and set constraints. For example, to create a table named `customers` with columns like `id` (INT, PRIMARY KEY, AUTO_INCREMENT), `name` (VARCHAR), and `email` (VARCHAR), you would use the table editor to define each column’s attributes.

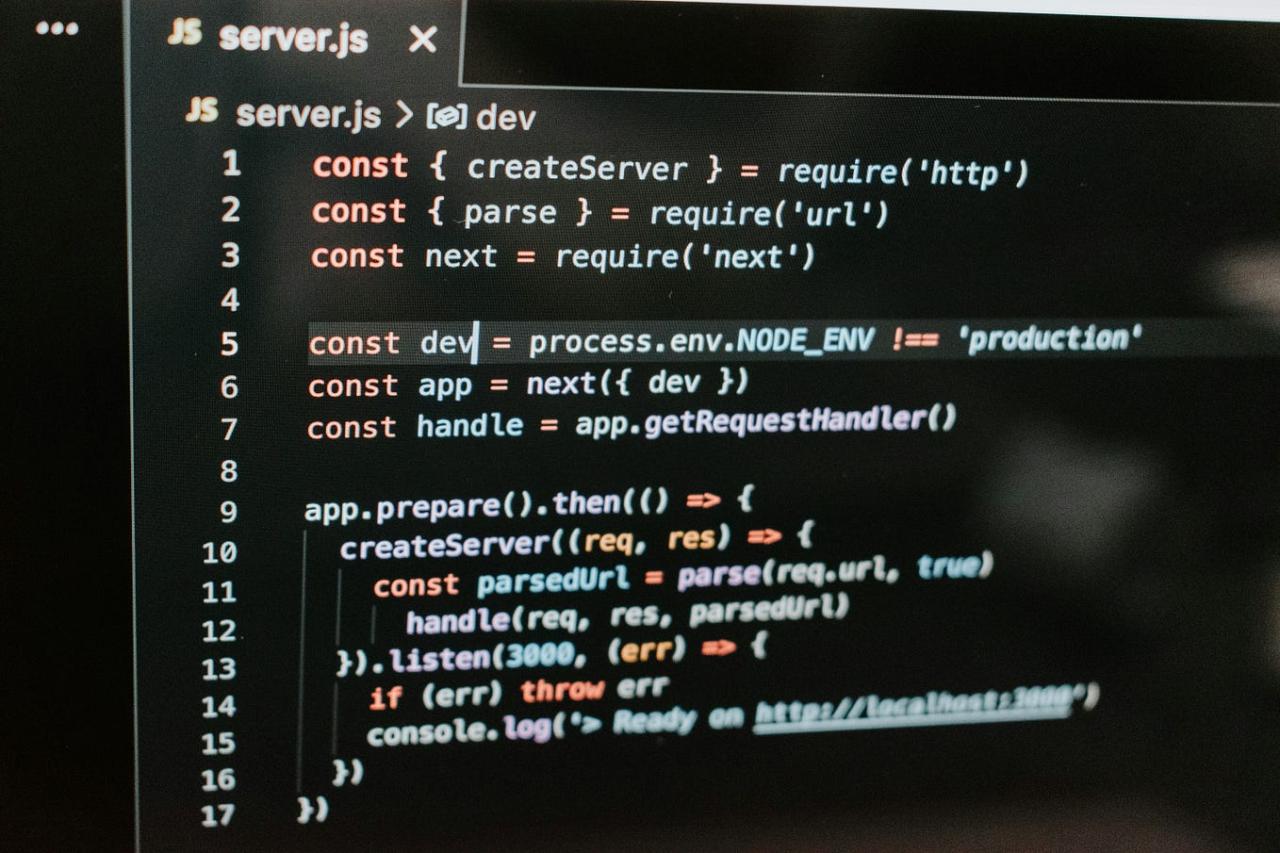

Connecting to the MySQL Database from Programming Languages

Connecting to the MySQL database from a programming language requires using a database connector or driver specific to that language. The process typically involves importing the necessary library, establishing a connection to the database server, executing SQL queries, and handling the results.

Connecting from Python:

- Install the MySQL Connector/Python:

pip install mysql-connector-python

- Import the library:

import mysql.connector

- Establish a connection:

mydb = mysql.connector.connect(
    host="localhost",
    user="your_username",
    password="your_password",
    database="your_database_name"
)

- Create a cursor object:

mycursor = mydb.cursor()

- Execute SQL queries:

mycursor.execute("SELECT * FROM your_table_name")

- Fetch results:

myresult = mycursor.fetchall()

- Close the connection:

mydb.close()
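If a query raises an exception, the `close()` call in the step-by-step version is skipped. A minimal sketch of a safer pattern wraps the cursor and connection in `contextlib.closing` so both are closed even on error. `fetch_rows` is a hypothetical helper. It accepts any DB-API 2.0 connection factory, so the same function works with MySQL Connector/Python, which uses `%s` placeholders.

```python
from contextlib import closing


def fetch_rows(connect, sql, params=None):
    """Run one query and return all rows, closing cursor and connection even if it fails.

    `connect` is any zero-argument callable that returns a DB-API 2.0 connection.
    """
    with closing(connect()) as conn:
        with closing(conn.cursor()) as cur:
            if params is None:
                cur.execute(sql)
            else:
                # Values are bound by the driver instead of being pasted into the SQL text
                cur.execute(sql, params)
            return cur.fetchall()


# Hypothetical usage with MySQL Connector/Python (requires a running server):
# import mysql.connector
# rows = fetch_rows(
#     lambda: mysql.connector.connect(host="localhost", user="your_username",
#                                     password="your_password", database="your_database_name"),
#     "SELECT id, name, email FROM your_table_name WHERE id = %s",
#     (1,),
# )
```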

Connecting from PHP:

- Ensure the mysqli extension is enabled in your PHP configuration (php.ini). It is often enabled by default.

- Establish a connection:

<?php
$servername = "localhost";
$username = "your_username";
$password = "your_password";
$dbname = "your_database_name";
// Create connection
$conn = new mysqli($servername, $username, $password, $dbname);
// Check connection
if ($conn->connect_error) {
    die("Connection failed: " . $conn->connect_error);
}
?>

- Execute SQL queries:

<?php
$sql = "SELECT * FROM your_table_name";
$result = $conn->query($sql);
if ($result->num_rows > 0) {
    // output data of each row
    while ($row = $result->fetch_assoc()) {
        echo "id: " . $row["id"] . " - Name: " . $row["name"] . " - Email: " . $row["email"] . "<br>";
    }
} else {
    echo "0 results";
}
$conn->close();
?>

- Close the connection: The example above includes closing the connection.

Connecting from Java:

- Include the MySQL Connector/J library in your project. You can download the JAR file from the MySQL website and add it to your project’s classpath. Alternatively, use a dependency management tool like Maven or Gradle.

- Import the necessary classes:

import java.sql.*;

- Establish a connection:

Connection conn = null;
try {
    String url = "jdbc:mysql://localhost:3306/your_database_name"; // Replace with your database URL
    String user = "your_username";
    String password = "your_password";
    conn = DriverManager.getConnection(url, user, password);
} catch (SQLException e) {
    e.printStackTrace();
}

- Create a statement object:

Statement stmt = conn.createStatement();

- Execute SQL queries:

ResultSet rs = stmt.executeQuery("SELECT * FROM your_table_name");

- Process the results:

while (rs.next()) {
    int id = rs.getInt("id");
    String name = rs.getString("name");
    String email = rs.getString("email");
    System.out.println("ID: " + id + ", Name: " + name + ", Email: " + email);
}

- Close the connection:

try {
    if (rs != null) rs.close();
    if (stmt != null) stmt.close();
    if (conn != null) conn.close();
} catch (SQLException e) {
    e.printStackTrace();
}

MySQL Server Configuration Options

MySQL server configuration options control various aspects of the server’s behavior, including performance, security, and resource usage. These options are typically set in the MySQL configuration file (e.g., `my.cnf` or `my.ini`).

| Configuration Option | Description |
|---|---|
| `port` | Specifies the port number that the MySQL server listens on for incoming connections. The default is 3306. |
| `datadir` | Specifies the directory where the MySQL data files are stored. |
| `bind-address` | Specifies the IP address that the MySQL server listens on. Setting it to `127.0.0.1` limits connections to the local machine, enhancing security. |
| `max_connections` | Limits the maximum number of concurrent connections to the MySQL server. |
| `innodb_buffer_pool_size` | Sets the size of the InnoDB buffer pool, which caches data and indexes for faster access. A larger buffer pool can improve performance, especially for read-heavy workloads. On a dedicated database server, a common recommendation is 50-80% of the available RAM, depending on the workload and other processes on the host. For example, on a server with 16GB of RAM, a value of 8GB-12GB might be appropriate. |
| `query_cache_size` | Sets the size of the query cache, which stores the results of SELECT queries. This can speed up frequently repeated identical queries, but adds overhead when the cache is frequently invalidated. The query cache was deprecated in MySQL 5.7.20 and removed in MySQL 8.0, so this option only applies to older versions. |
| `log_error` | Specifies the file where the MySQL server error log is written. |
| `slow_query_log` | Enables the slow query log, which records queries that take longer than a specified time to execute. This is useful for identifying slow queries that need optimization. The `long_query_time` variable sets the threshold in seconds. |
| `sql_mode` | Sets the SQL mode, which controls how the MySQL server handles SQL syntax and data validation. Different modes can enforce stricter data checks, prevent silent data truncation, and improve compatibility with other database systems. For example, `sql_mode=STRICT_TRANS_TABLES` makes the server reject invalid or out-of-range values for transactional tables instead of silently adjusting them. |
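To show how several of these options fit together in an option file, the sketch below derives a buffer pool size from the 50-80% rule of thumb and renders a `[mysqld]` section. Both helper names are hypothetical. The values for `max_connections` (200) and `long_query_time` (2 seconds) are placeholders chosen for illustration, not tuned recommendations.

```python
def buffer_pool_size_gb(total_ram_gb: int, fraction: float = 0.5) -> int:
    """Suggest an InnoDB buffer pool size (whole GB) as a fraction of RAM on a dedicated server."""
    if not 0.5 <= fraction <= 0.8:
        raise ValueError("fraction outside the 50-80% rule of thumb")
    return int(total_ram_gb * fraction)


def render_mysqld_section(settings: dict[str, object]) -> str:
    """Render options as a [mysqld] block suitable for my.cnf / my.ini."""
    lines = ["[mysqld]"]
    lines += [f"{key} = {value}" for key, value in settings.items()]
    return "\n".join(lines) + "\n"


config = render_mysqld_section({
    "bind-address": "127.0.0.1",        # local connections only
    "max_connections": 200,             # placeholder value
    "innodb_buffer_pool_size": f"{buffer_pool_size_gb(16, 0.5)}G",  # 8G on a 16GB host
    "slow_query_log": 1,
    "long_query_time": 2,               # placeholder threshold, in seconds
})
print(config)
```

MySQL accepts size suffixes such as `G` in option files. Restart the server after editing the file, because some of these options (for example `bind-address`) are only read at startup.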

Database Schema Creation & Data Population

Now that the MySQL environment is set up, the next crucial step involves designing and populating the database with data. This phase focuses on defining the structure of the database, creating tables, establishing relationships between them, and finally, inserting data into these tables. A well-designed schema and accurate data population are fundamental for the efficient operation and reliable results of any database-driven project.

This section details the necessary steps and provides practical examples to achieve these goals.

Creating Tables in MySQL

Creating tables involves defining the structure of the data, including the names and data types of columns, and constraints to ensure data integrity. The syntax for creating a table in MySQL is straightforward, but understanding the options is key to building a robust database.

The basic syntax for creating a table is as follows:

```sql
CREATE TABLE table_name (
    column1 datatype constraints,
    column2 datatype constraints,
    column3 datatype constraints,
    ...
);
```

- CREATE TABLE: This initiates the table creation process.
- table_name: This specifies the name of the table you are creating. It’s best practice to choose descriptive names.
- column1, column2, column3, ...: These represent the names of the columns within the table.
- datatype: This specifies the type of data that can be stored in the column (e.g., INT, VARCHAR, DATE).
- constraints: These define rules for the data in the column, such as primary keys, foreign keys, and data validation.

Here are some key constraints and their uses:

- PRIMARY KEY: Uniquely identifies each row in the table. A table can have only one primary key. This constraint ensures that the values in the primary key column(s) are unique and not null.

- FOREIGN KEY: Establishes a link between two tables. It references the primary key of another table, ensuring referential integrity.

- NOT NULL: Ensures that a column cannot contain a NULL value.

- UNIQUE: Ensures that all values in a column are unique.

- DEFAULT: Specifies a default value for a column if no value is provided during data insertion.

- CHECK: Specifies a condition that the data in a column must satisfy. Note: MySQL supports the CHECK constraint from version 8.0.16. Earlier versions ignore this constraint.

Example:

```sql
CREATE TABLE Customers (
    CustomerID INT PRIMARY KEY,
    FirstName VARCHAR(255) NOT NULL,
    LastName VARCHAR(255) NOT NULL,
    Email VARCHAR(255) UNIQUE,
    City VARCHAR(255) DEFAULT 'Unknown'
);
```

This SQL statement creates a table named `Customers` with columns for `CustomerID` (primary key), `FirstName`, `LastName`, `Email` (unique), and `City` (with a default value).
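To watch these constraints reject bad data, the sketch below runs the same `Customers` definition through Python's built-in `sqlite3` module. SQLite is used purely as a stand-in so the example runs without a MySQL server. It enforces PRIMARY KEY, NOT NULL, UNIQUE, and DEFAULT in the same spirit, but its type system is looser than MySQL's, so treat this as an illustration of the constraints rather than a test of MySQL itself. The `try_insert` helper is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE Customers (
        CustomerID INT PRIMARY KEY,
        FirstName VARCHAR(255) NOT NULL,
        LastName VARCHAR(255) NOT NULL,
        Email VARCHAR(255) UNIQUE,
        City VARCHAR(255) DEFAULT 'Unknown'
    )
""")

# City is omitted, so the DEFAULT value is stored
conn.execute(
    "INSERT INTO Customers (CustomerID, FirstName, LastName, Email) VALUES (?, ?, ?, ?)",
    (1, "John", "Doe", "john.doe@example.com"),
)
print(conn.execute("SELECT City FROM Customers WHERE CustomerID = 1").fetchone())  # ('Unknown',)


def try_insert(row):
    """Attempt a full-row insert and report whether a constraint rejected it."""
    try:
        conn.execute("INSERT INTO Customers VALUES (?, ?, ?, ?, ?)", row)
        return "inserted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


print(try_insert((2, "Jane", "Smith", "john.doe@example.com", "Boston")))  # duplicate Email (UNIQUE)
print(try_insert((3, None, "Brown", "b@example.com", "Austin")))            # NULL FirstName (NOT NULL)
print(try_insert((1, "Ann", "Lee", "ann@example.com", "Denver")))            # duplicate CustomerID (PRIMARY KEY)
```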

Writing SQL Queries for Data Manipulation

Data manipulation involves inserting, updating, and deleting data within the database. SQL provides specific commands for each of these operations. Understanding these commands is crucial for managing and maintaining the data stored in your database.

- INSERT: Used to add new rows of data into a table.

- UPDATE: Used to modify existing data in a table.

- DELETE: Used to remove rows from a table.

Here are examples of how to use these commands:

- INSERT:

```sql
INSERT INTO Customers (CustomerID, FirstName, LastName, Email, City)
VALUES (1, 'John', 'Doe', 'john.doe@example.com', 'New York');
```

This statement inserts a new row into the `Customers` table with the specified values.

- UPDATE:

```sql
UPDATE Customers
SET City = 'Los Angeles'
WHERE CustomerID = 1;
```

This statement updates the `City` column to ‘Los Angeles’ for the customer with `CustomerID` 1.

- DELETE:

```sql
DELETE FROM Customers
WHERE CustomerID = 1;
```

This statement deletes the row from the `Customers` table where `CustomerID` is 1.
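A forgotten or mistyped `WHERE` clause turns a single-row `UPDATE` or `DELETE` into a table-wide one. One defensive pattern, sketched below, checks the cursor's DB-API `rowcount` and rolls back unless exactly the expected number of rows changed. `sqlite3` stands in for MySQL so the snippet runs anywhere. With MySQL Connector/Python the same `rowcount`, `commit()`, and `rollback()` calls apply, with `%s` placeholders instead of `?`. The helper name is hypothetical.

```python
import sqlite3


def update_city(conn, customer_id, city):
    """Set one customer's City; commit only if exactly one row changed, else roll back."""
    cur = conn.cursor()
    cur.execute(
        "UPDATE Customers SET City = ? WHERE CustomerID = ?", (city, customer_id)
    )
    if cur.rowcount != 1:
        conn.rollback()  # zero (or several) rows touched: undo and report failure
        return False
    conn.commit()
    return True


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Customers (CustomerID INT PRIMARY KEY, City VARCHAR(255))")
conn.execute("INSERT INTO Customers VALUES (1, 'New York')")
conn.commit()

print(update_city(conn, 1, "Los Angeles"))  # True: one row matched
print(update_city(conn, 99, "Chicago"))     # False: no such customer, nothing committed
```

One caveat: MySQL's affected-row count for `UPDATE` can exclude rows whose value did not actually change, depending on client connection flags. Check how your driver reports it before relying on an exact count.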

Implementing Data Validation Techniques

Data validation is critical for maintaining the integrity and reliability of the data stored in a database. It ensures that the data conforms to the predefined rules and constraints. Various techniques can be used to validate data during insertion or update operations.

- Data Type Validation: Ensures that the data entered matches the expected data type of the column. For instance, an `INT` column should only accept integer values.

- Constraints: Constraints like `NOT NULL`, `UNIQUE`, and `CHECK` enforce specific rules on the data.

- Triggers: Triggers can be used to perform more complex validation logic before or after data modification operations.

- Application-Level Validation: Validation can also be performed in the application code before sending data to the database. This is often used to validate data that is not easily validated with database constraints.

Example:

```sql
-- Using CHECK constraint (MySQL 8.0.16+)
CREATE TABLE Orders (
    OrderID INT PRIMARY KEY,
    OrderDate DATE,
    TotalAmount DECIMAL(10, 2),
    CHECK (TotalAmount >= 0)
);
```

This example uses a `CHECK` constraint to ensure that the `TotalAmount` for each order is not negative. If you are using an older version of MySQL, you will have to implement data validation through other means, such as triggers or within your application code.
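For MySQL versions older than 8.0.16, or for rules a constraint cannot express, the same check can live in application code. This is a minimal sketch of application-level validation for an `Orders` row before it is sent to the database. The function name is hypothetical. Every rule beyond `TotalAmount >= 0` is an assumption chosen to mirror the `DATE` and `DECIMAL(10, 2)` column types: a real calendar date, at most two decimal places, and a maximum of 99999999.99.

```python
from datetime import datetime
from decimal import Decimal, InvalidOperation

MAX_DECIMAL_10_2 = Decimal("99999999.99")  # largest value DECIMAL(10, 2) can hold


def validate_order(order_date: str, total_amount: str) -> list[str]:
    """Return a list of problems with an Orders row; an empty list means it looks valid."""
    errors = []
    try:
        datetime.strptime(order_date, "%Y-%m-%d")  # also rejects impossible dates like 2024-02-30
    except ValueError:
        errors.append(f"OrderDate {order_date!r} is not a valid YYYY-MM-DD date")
    try:
        amount = Decimal(total_amount)
    except InvalidOperation:
        errors.append(f"TotalAmount {total_amount!r} is not a number")
        return errors
    if not amount.is_finite():
        errors.append("TotalAmount must be a finite number")
        return errors
    if amount < 0:
        errors.append("TotalAmount must be >= 0")  # same rule as CHECK (TotalAmount >= 0)
    if amount.as_tuple().exponent < -2:
        errors.append("TotalAmount has more than 2 decimal places")
    if amount > MAX_DECIMAL_10_2:
        errors.append("TotalAmount exceeds the DECIMAL(10, 2) range")
    return errors


print(validate_order("2024-03-01", "19.99"))  # []
print(validate_order("2024-02-30", "-5"))     # two problems: bad date, negative amount
```

Keeping the database constraint as well is still worthwhile where it is available, because it also protects against writes that bypass the application.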

Importing Data from External Sources

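Importing data from external sources is a common task when populating a database. MySQL's main built-in option for bulk imports is the `LOAD DATA INFILE` statement, which reads delimited text files such as CSV. Data in other formats, such as Excel spreadsheets, is usually converted to CSV first.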

- Importing from CSV Files: The `LOAD DATA INFILE` statement is used to import data from CSV files.

```sql
LOAD DATA INFILE '/path/to/your/file.csv'
INTO TABLE Customers
FIELDS TERMINATED BY ','
ENCLOSED BY '"'
LINES TERMINATED BY '\n'
IGNORE 1 ROWS; -- Skips the header row, if any
```

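This statement imports data from the specified CSV file into the `Customers` table. The `FIELDS TERMINATED BY`, `ENCLOSED BY`, and `LINES TERMINATED BY` clauses describe how the data is formatted in the CSV file, and the `IGNORE 1 ROWS` clause skips the header row.

Note that `LOAD DATA INFILE` reads the file from the server host, requires the `FILE` privilege, and is restricted by the `secure_file_priv` setting. `LOAD DATA LOCAL INFILE` reads the file from the client machine instead, provided local loading (`local_infile`) is enabled on both the server and the client.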

- Importing from Excel Spreadsheets: Importing directly from Excel is not a native feature of MySQL. You typically need to export the Excel data to a CSV file first and then import it using the `LOAD DATA INFILE` statement. Alternatively, you can use tools or libraries in your programming language of choice to read the Excel file and then use SQL `INSERT` statements to populate the database.
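When the server cannot read the file directly, the programmatic route can be sketched with Python's standard `csv` module: parse the exported CSV, skip the header, and hand the rows to a parameterized `executemany()` call. The column list follows the earlier `Customers` table. The `read_customer_rows` helper is hypothetical. The `%s` placeholders match the parameter style MySQL Connector/Python uses; the database call is shown only as a comment, so the snippet runs without a server.

```python
import csv
import io

INSERT_CUSTOMER = (
    "INSERT INTO Customers (CustomerID, FirstName, LastName, Email, City) "
    "VALUES (%s, %s, %s, %s, %s)"
)


def read_customer_rows(text_stream):
    """Parse CSV text with a header row into tuples ready for executemany()."""
    reader = csv.reader(text_stream)  # handles quoted fields such as "Doe, Jr."
    next(reader, None)                # skip the header row, like IGNORE 1 ROWS
    rows = []
    for line in reader:
        if not line:
            continue  # ignore blank lines
        customer_id, first, last, email, city = (field.strip() for field in line)
        # An empty Email becomes NULL: a UNIQUE column accepts many NULLs but only one ''
        rows.append((int(customer_id), first, last, email or None, city))
    return rows


sample = io.StringIO(
    "CustomerID,FirstName,LastName,Email,City\n"
    '1,John,"Doe, Jr.",john.doe@example.com,New York\n'
    "2,Jane,Smith,,Boston\n"
)
rows = read_customer_rows(sample)
print(rows)

# With MySQL Connector/Python (not run here):
# cursor.executemany(INSERT_CUSTOMER, rows)
# connection.commit()
```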

Designing a SQL Script for Database Schema

Designing a SQL script for a sample project involves creating the table definitions, including column names, data types, and constraints. The following example demonstrates a sample SQL script for a simple e-commerce database.

```sql
-- Create the database (if it doesn't exist)
CREATE DATABASE IF NOT EXISTS e_commerce_db;

-- Use the database
USE e_commerce_db;

-- Create the Customers table
CREATE TABLE Customers (
    CustomerID INT PRIMARY KEY AUTO_INCREMENT,
    FirstName VARCHAR(255) NOT NULL,
    LastName VARCHAR(255) NOT NULL,
    Email VARCHAR(255) UNIQUE,
    Phone VARCHAR(20),
    Address VARCHAR(255),
    City VARCHAR(255),
    State VARCHAR(255),
    ZipCode VARCHAR(10),
    Country VARCHAR(255)
);

-- Create the Categories table before Products, because Products references it
CREATE TABLE Categories (
    CategoryID INT PRIMARY KEY AUTO_INCREMENT,
    CategoryName VARCHAR(255) NOT NULL
);

-- Create the Products table
CREATE TABLE Products (
    ProductID INT PRIMARY KEY AUTO_INCREMENT,
    ProductName VARCHAR(255) NOT NULL,
    Description TEXT,
    Price DECIMAL(10, 2) NOT NULL,
    ImageURL VARCHAR(255),
    CategoryID INT,
    FOREIGN KEY (CategoryID) REFERENCES Categories(CategoryID)
);

-- Create the Orders table
CREATE TABLE Orders (
    OrderID INT PRIMARY KEY AUTO_INCREMENT,
    CustomerID INT NOT NULL,
    OrderDate DATETIME DEFAULT CURRENT_TIMESTAMP,
    TotalAmount DECIMAL(10, 2),
    FOREIGN KEY (CustomerID) REFERENCES Customers(CustomerID)
);

-- Create the OrderItems table (linking orders and products)
CREATE TABLE OrderItems (
    OrderItemID INT PRIMARY KEY AUTO_INCREMENT,
    OrderID INT NOT NULL,
    ProductID INT NOT NULL,
    Quantity INT NOT NULL,
    Price DECIMAL(10, 2) NOT NULL,
    FOREIGN KEY (OrderID) REFERENCES Orders(OrderID),
    FOREIGN KEY (ProductID) REFERENCES Products(ProductID)
);

-- Example data population (INSERT statements)
INSERT INTO Customers (FirstName, LastName, Email, Phone, Address, City, State, ZipCode, Country) VALUES
('John', 'Doe', 'john.doe@example.com', '123-456-7890', '123 Main St', 'Anytown', 'CA', '91234', 'USA'),
('Jane', 'Smith', 'jane.smith@example.com', '987-654-3210', '456 Oak Ave', 'Somecity', 'NY', '10001', 'USA');

INSERT INTO Categories (CategoryName) VALUES
('Electronics'),
('Clothing'),
('Books');

INSERT INTO Products (ProductName, Description, Price, CategoryID) VALUES
('Laptop', 'High-performance laptop', 1200.00, 1),
('T-shirt', 'Cotton T-shirt', 25.00, 2),
('The Lord of the Rings', 'Fantasy novel', 20.00, 3);

INSERT INTO Orders (CustomerID, TotalAmount) VALUES
(1, 1200.00),
(2, 25.00);

INSERT INTO OrderItems (OrderID, ProductID, Quantity, Price) VALUES
(1, 1, 1, 1200.00),
(2, 2, 1, 25.00);
```

This script creates the database, the tables (Customers, Products, Categories, Orders, and OrderItems), and includes example `INSERT` statements to populate the tables with initial data. The script defines primary keys, foreign keys, and data types to ensure data integrity and establish relationships between the tables. `AUTO_INCREMENT` automatically generates a unique ID for each new record in the primary key columns.

The `FOREIGN KEY` constraints establish relationships between tables, ensuring referential integrity. For instance, the `Orders` table’s `CustomerID` column references the `Customers` table’s `CustomerID` column.
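Because a `FOREIGN KEY` can only reference a table that already exists, the order of `CREATE TABLE` statements matters: a referenced table such as `Categories` has to be created before `Products`. One way to derive a safe order from the relationships is a topological sort, sketched below with Python's standard `graphlib` module (Python 3.9+). The dependency map was transcribed by hand from the foreign keys in the script above. It is an illustration, not a tool that parses SQL.

```python
from graphlib import TopologicalSorter

# table -> set of tables it references through FOREIGN KEY constraints
foreign_keys = {
    "Customers": set(),
    "Categories": set(),
    "Products": {"Categories"},
    "Orders": {"Customers"},
    "OrderItems": {"Orders", "Products"},
}

# static_order() yields each table only after every table it depends on
creation_order = list(TopologicalSorter(foreign_keys).static_order())
print(creation_order)

# Dropping tables works in reverse: dependent tables must go first
drop_order = list(reversed(creation_order))
```

If the relationships ever form a cycle, `static_order()` raises `graphlib.CycleError`. That is a signal that at least one foreign key has to be added afterwards with `ALTER TABLE`.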

Implementing CRUD Operations

Implementing Create, Read, Update, and Delete (CRUD) operations is fundamental to interacting with any database, including MySQL. These operations form the core of data management, allowing users to insert new data, retrieve existing data, modify existing data, and remove unwanted data. This section will delve into the specifics of writing SQL queries for CRUD, the importance of preventing SQL injection, the use of transactions for data consistency, and demonstrate these concepts with code examples.

Writing SQL Queries for CRUD Operations

CRUD operations are executed using specific SQL statements. Understanding these statements is crucial for effective database interaction.

- Create (INSERT): This operation adds new data to a table. The syntax involves specifying the table name and the values to be inserted for each column. For example:

```sql
INSERT INTO employees (employee_id, first_name, last_name, department_id) VALUES (101, 'John', 'Doe', 1);
```

This statement inserts a new employee record into the `employees` table.

- Read (SELECT): This operation retrieves data from one or more tables. The `SELECT` statement is used with various clauses like `WHERE` (for filtering data), `ORDER BY` (for sorting), and `JOIN` (for combining data from multiple tables). Example:

```sql
SELECT * FROM employees WHERE department_id = 1;
```

This statement retrieves all columns (`*`) from the `employees` table for employees in department 1.

- Update (UPDATE): This operation modifies existing data in a table. It uses the `UPDATE` statement along with the `SET` clause to specify the columns to update and the `WHERE` clause to specify which rows to update. For example:

```sql
UPDATE employees SET last_name = 'Smith' WHERE employee_id = 101;
```

This statement updates the `last_name` of the employee with `employee_id` 101 to 'Smith'.

- Delete (DELETE): This operation removes data from a table. It uses the `DELETE` statement along with the `WHERE` clause to specify which rows to delete. For example:

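```sql
DELETE FROM employees WHERE employee_id = 101;
```

This statement deletes the employee record with `employee_id` 101. Omitting the `WHERE` clause in an `UPDATE` or `DELETE` statement affects every row in the table, so double-check the condition before running either.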

Preventing SQL Injection Vulnerabilities with Prepared Statements

SQL injection is a common security vulnerability that occurs when user-supplied data is incorporated directly into SQL queries without proper sanitization. Prepared statements are a crucial defense against SQL injection. They separate the SQL query structure from the data, preventing malicious code from being executed.

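- How Prepared Statements Work: A prepared statement has two steps: preparing the query and executing it with parameters. With server-side prepared statements, the query structure is sent to the database server first and the data is sent separately, so user-provided data is always treated as data, never as executable SQL. Some drivers emulate this on the client instead (PDO's MySQL driver does so by default, as does the default cursor of MySQL Connector/Python), escaping the bound values before sending a single query; either way, placeholders keep the data from changing the structure of the query.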

- Example in PHP:

```php
<?php
$servername = "localhost";
$username = "your_username";
$password = "your_password";
$dbname = "your_database";

try {
    $conn = new PDO("mysql:host=$servername;dbname=$dbname", $username, $password);
    $conn->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

    // Prepare the statement; ? is a positional placeholder
    $stmt = $conn->prepare("SELECT * FROM employees WHERE last_name = ?");

    // Bind the parameter and execute
    $lastName = "Doe";
    $stmt->execute([$lastName]);

    // Fetch results
    $result = $stmt->fetchAll(PDO::FETCH_ASSOC);
    foreach ($result as $row) {
        echo "Employee ID: " . $row["employee_id"] . " - Name: " . $row["first_name"] . " " . $row["last_name"] . "<br>";
    }
} catch (PDOException $e) {
    echo "Database error: " . $e->getMessage();
}
?>
```

In this PHP example, the `prepare()` method creates the prepared statement, and `execute()` binds the provided value (`$lastName`) to the `?` placeholder and runs the query.

- Example in Python:

```python
import mysql.connector

mydb = mysql.connector.connect(
    host="localhost",
    user="yourusername",
    password="yourpassword",
    database="yourdatabase"
)
mycursor = mydb.cursor()

# %s is the placeholder; the value is passed separately as a tuple
sql = "SELECT * FROM employees WHERE last_name = %s"
val = ("Doe",)
mycursor.execute(sql, val)

myresult = mycursor.fetchall()
for x in myresult:
    print(x)
```

Here, `%s` is a placeholder for the parameter, and `execute()` passes the value separately from the SQL text.
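The difference between concatenating input into SQL and binding it as a parameter can be shown in a few lines. This sketch uses Python's built-in `sqlite3` module as a stand-in for MySQL (its placeholder is `?` rather than Connector/Python's `%s`); the table contents and the function name `injection_demo` are hypothetical. A classic payload turns the concatenated query's filter into a condition that is always true, while the parameterized query simply looks for a last name equal to the whole string.

```python
import sqlite3


def injection_demo(user_input):
    """Run the same lookup unsafely and safely; return (unsafe_rows, safe_rows)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT);
        INSERT INTO employees VALUES (1, 'John', 'Doe'), (2, 'Jane', 'Smith');
    """)

    # UNSAFE: the input becomes part of the SQL text, so quotes in it rewrite the query
    unsafe_sql = "SELECT * FROM employees WHERE last_name = '" + user_input + "'"
    unsafe_rows = conn.execute(unsafe_sql).fetchall()

    # SAFE: the placeholder keeps the whole input as one string value
    safe_rows = conn.execute(
        "SELECT * FROM employees WHERE last_name = ?", (user_input,)
    ).fetchall()

    conn.close()
    return unsafe_rows, safe_rows
```

With the input `x' OR '1'='1`, the unsafe query returns every employee, while the parameterized one returns nothing; with an ordinary name such as `Doe`, both behave the same.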

Using Transactions for Data Consistency

Transactions ensure that a series of database operations are treated as a single, atomic unit. Either all operations within the transaction succeed, or none of them do. This guarantees data consistency, particularly in complex operations involving multiple updates.

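- How Transactions Work: A transaction begins with `START TRANSACTION` (or `BEGIN`), followed by one or more SQL statements. If all statements succeed, the changes are made permanent with `COMMIT`. If any statement fails, the application issues `ROLLBACK` to undo every change made within the transaction; for most errors, MySQL rolls back only the failing statement on its own, not the whole transaction. Transactions require a transactional storage engine such as InnoDB (the default); MyISAM tables ignore them.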

- Example in PHP:

```php
<?php
$servername = "localhost";
$username = "your_username";
$password = "your_password";
$dbname = "your_database";

try {
    $conn = new PDO("mysql:host=$servername;dbname=$dbname", $username, $password);
    $conn->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

    // Begin transaction
    $conn->beginTransaction();

    // Give employee 102 a 10% raise
    $sql1 = "UPDATE employees SET salary = salary * 1.10 WHERE employee_id = 102";
    $conn->exec($sql1);

    // Record the change in the salary history
    $sql2 = "INSERT INTO salary_history (employee_id, old_salary, new_salary, date_updated) VALUES (102, 50000, 55000, NOW())";
    $conn->exec($sql2);

    // Commit transaction
    $conn->commit();
    echo "Transaction successful";
} catch (PDOException $e) {
    // Roll back only if the connection was opened and a transaction is active
    if (isset($conn) && $conn->inTransaction()) {
        $conn->rollBack();
    }
    echo "Transaction failed: " . $e->getMessage();
}
?>
```

In this example, two SQL statements are executed within a transaction. If either one fails, the transaction is rolled back, leaving the database in a consistent state. For instance, if the `UPDATE` for `employee_id` 102 fails, the exception skips the `INSERT`, and nothing is changed.

- Example in Python:

```python
import mysql.connector

mydb = mysql.connector.connect(
    host="localhost",
    user="yourusername",
    password="yourpassword",
    database="yourdatabase"
)
mycursor = mydb.cursor()

try:
    mydb.start_transaction()
    # Give employee 103 a 10% raise
    sql1 = "UPDATE employees SET salary = salary * 1.10 WHERE employee_id = 103"
    mycursor.execute(sql1)
    # Record the change in the salary history
    sql2 = "INSERT INTO salary_history (employee_id, old_salary, new_salary, date_updated) VALUES (103, 60000, 66000, NOW())"
    mycursor.execute(sql2)
    mydb.commit()
    print("Transaction successful")
except Exception as e:
    mydb.rollback()
    print(f"Transaction failed: {e}")
finally:
    mycursor.close()
    mydb.close()
```

This Python code similarly uses a transaction to keep the salary update and the salary history record together. If any error occurs during the update or insert, `rollback()` reverts the changes made during the transaction, maintaining data consistency.
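The all-or-nothing behavior can be checked without a MySQL server. In this sketch, Python's built-in `sqlite3` module stands in for MySQL, and a hypothetical `CHECK` constraint on `salary_history` makes the `INSERT` fail whenever the "raise" would lower the salary. Using the connection as a context manager (`with conn:`) commits on success and rolls back on an exception, so a failed `INSERT` also undoes the `UPDATE` that ran before it. The table layout and the function names `setup`, `give_raise`, and `current_salary` are assumptions for illustration.

```python
import sqlite3


def setup():
    """Create an in-memory database with one employee earning 50,000."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE employees (
            employee_id INTEGER PRIMARY KEY,
            salary REAL NOT NULL
        );
        CREATE TABLE salary_history (
            employee_id INTEGER NOT NULL,
            old_salary REAL NOT NULL,
            new_salary REAL NOT NULL,
            CHECK (new_salary > old_salary)  -- hypothetical rule: history records raises only
        );
        INSERT INTO employees VALUES (102, 50000.0);
    """)
    return conn


def give_raise(conn, employee_id, factor):
    """Update the salary and log it atomically; return False if the unit was rolled back."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            (old,) = conn.execute(
                "SELECT salary FROM employees WHERE employee_id = ?", (employee_id,)
            ).fetchone()
            conn.execute(
                "UPDATE employees SET salary = salary * ? WHERE employee_id = ?",
                (factor, employee_id),
            )
            conn.execute(
                "INSERT INTO salary_history (employee_id, old_salary, new_salary) VALUES (?, ?, ?)",
                (employee_id, old, old * factor),
            )
        return True
    except sqlite3.IntegrityError:
        return False


def current_salary(conn, employee_id):
    return conn.execute(
        "SELECT salary FROM employees WHERE employee_id = ?", (employee_id,)
    ).fetchone()[0]
```

Calling `give_raise(conn, 102, 0.9)` violates the `CHECK` in the second statement, so the salary stays at 50,000 and no history row is written; `give_raise(conn, 102, 1.1)` succeeds and both changes are committed together.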

Code Snippets Demonstrating CRUD Operations

Below are examples demonstrating CRUD operations in PHP and Python.

- PHP Example:

```php
<?php
$servername = "localhost";
$username = "your_username";
$password = "your_password";
$dbname = "your_database";

try {
    $conn = new PDO("mysql:host=$servername;dbname=$dbname", $username, $password);
    $conn->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

    // Create operation
    $stmt = $conn->prepare("INSERT INTO employees (first_name, last_name, department_id) VALUES (?, ?, ?)");
    $stmt->execute(["Alice", "Wonderland", 2]);
    $last_id = $conn->lastInsertId();
    echo "New record created successfully. Last inserted ID is: " . $last_id . "<br>";

    // Read operation
    $stmt = $conn->prepare("SELECT * FROM employees WHERE employee_id = ?");
    $stmt->execute([$last_id]);
    $result = $stmt->fetch(PDO::FETCH_ASSOC);
    echo "Employee: " . $result["first_name"] . " " . $result["last_name"] . "<br>";

    // Update operation
    $stmt = $conn->prepare("UPDATE employees SET department_id = ? WHERE employee_id = ?");
    $stmt->execute([3, $last_id]);
    echo "Record updated successfully<br>";

    // Delete operation
    $stmt = $conn->prepare("DELETE FROM employees WHERE employee_id = ?");
    $stmt->execute([$last_id]);
    echo "Record deleted successfully<br>";
} catch (PDOException $e) {
    echo "Database error: " . $e->getMessage();
}
?>
```

This PHP code demonstrates the four CRUD operations: it connects to the database, creates a new employee record, reads it back, updates its department, and finally deletes it. Every statement uses a prepared statement, even where the value (such as `$last_id`) comes from the database rather than the user, which keeps the code uniform and safe.

- Python Example:

```python
import mysql.connector

mydb = mysql.connector.connect(
    host="localhost",
    user="yourusername",
    password="yourpassword",
    database="yourdatabase"
)
mycursor = mydb.cursor()

# Create operation
sql = "INSERT INTO employees (first_name, last_name, department_id) VALUES (%s, %s, %s)"
val = ("Bob", "Builder", 1)
mycursor.execute(sql, val)
mydb.commit()
last_id = mycursor.lastrowid
print(last_id, "record inserted.")

# Read operation
sql = "SELECT * FROM employees WHERE employee_id = %s"
mycursor.execute(sql, (last_id,))
myresult = mycursor.fetchone()
print(myresult)

# Update operation
sql = "UPDATE employees SET department_id = %s WHERE employee_id = %s"
mycursor.execute(sql, (2, last_id))
mydb.commit()
print(mycursor.rowcount, "record(s) affected")

# Delete operation
sql = "DELETE FROM employees WHERE employee_id = %s"
mycursor.execute(sql, (last_id,))
mydb.commit()
print(mycursor.rowcount, "record(s) deleted")

mycursor.close()
mydb.close()
```

This Python code illustrates the same CRUD operations through the MySQL connector: it creates a new employee, reads the employee's details, updates the department ID, and deletes the employee.

Handling Database Errors and Exceptions

Proper error handling is essential for creating robust and user-friendly applications. It involves anticipating potential issues and providing informative feedback to users or logging errors for debugging.

- Error Handling Techniques:

- Try-Catch Blocks: These blocks are used to catch exceptions that may occur during database operations. This allows the application to gracefully handle errors instead of crashing.

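- Error Messages: Give users clear messages that help them understand what went wrong and what to do next, for example, “Failed to connect to the database. Please check your connection settings.” Avoid showing raw database error text to end users, since it can reveal table names, queries, or server details; record those details in the logs instead.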

- Logging: Logging errors to a file or a monitoring system is crucial for debugging and tracking issues. This provides valuable information about the nature and frequency of errors.

- Exception Handling: Using exception handling to manage errors gracefully prevents abrupt program termination.

- Example in PHP:

```php
<?php
$servername = "localhost";
$username = "your_username";
$password = "your_password";
$dbname = "your_database";

try {
    $conn = new PDO("mysql:host=$servername;dbname=$dbname", $username, $password);
    $conn->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
    $sql = "INSERT INTO employees (first_name, last_name, department_id) VALUES ('Alice', 'Wonderland', 2)";
    $conn->exec($sql);
    echo "New record created successfully<br>";
} catch (PDOException $e) {
    // Show a generic message to the user
    echo "Sorry, the record could not be saved. Please try again later.<br>";
    // Log the technical details (message type 3 appends to the given file)
    error_log("Database error: " . $e->getMessage() . PHP_EOL, 3, "/var/log/php_errors.log");
}
?>
```

In this example, the `try`/`catch` block handles `PDOException` errors. If an error occurs, the user sees a generic message, while the technical details are logged to a file for later analysis.

- Example in Python:

```python
import mysql.connector

mydb = None
mycursor = None
try:
    mydb = mysql.connector.connect(
        host="localhost",
        user="yourusername",
        password="yourpassword",
        database="yourdatabase"
    )
    mycursor = mydb.cursor()
    sql = "INSERT INTO employees (first_name, last_name, department_id) VALUES (%s, %s, %s)"
    val = ("Bob", "Builder", 1)
    mycursor.execute(sql, val)
    mydb.commit()
    print(mycursor.lastrowid, "record inserted.")
except mysql.connector.Error as err:
    print(f"Error: {err}")  # Display error message
    # Log the error
    with open("database_errors.log", "a") as f:
        f.write(f"Error: {err}\n")
finally:
    # Close whatever was opened, even if an error occurred
    if mycursor is not None:
        mycursor.close()
    if mydb is not None and mydb.is_connected():
        mydb.close()
```

This Python code demonstrates error handling using a `try`/`except` block. It catches `mysql.connector.Error` exceptions, displays an error message, and logs the error to a file. The `finally` block ensures that the cursor and connection are closed, even if an error occurs.
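A common refinement is to route technical details through a logging framework and return only a safe, user-facing message. The sketch below does this with Python's standard `logging` module, using the built-in `sqlite3` module as a stand-in for MySQL Connector/Python (whose exceptions derive from `mysql.connector.Error` rather than `sqlite3.Error`). The `employees` table with a `UNIQUE` email column, the logger name, and the function name `add_employee` are hypothetical.

```python
import logging
import sqlite3

logger = logging.getLogger("app.db")


def add_employee(conn, email):
    """Insert an employee and return a message that is safe to show to users."""
    try:
        with conn:  # commit on success, roll back on error
            conn.execute("INSERT INTO employees (email) VALUES (?)", (email,))
        return "Employee added."
    except sqlite3.IntegrityError:
        # Expected, recoverable problem: the user can fix the input
        logger.warning("Rejected duplicate email %r", email)
        return "That email address is already registered."
    except sqlite3.Error:
        # Unexpected failure: keep the traceback in the log, not on screen
        logger.exception("Database error while adding employee")
        return "Something went wrong. Please try again later."
```

Separating expected errors (such as a duplicate key) from unexpected ones lets the application give a specific hint where it helps and a generic message everywhere else, while `logger.exception` records the full traceback for debugging.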

Advanced SQL Techniques

Mastering advanced SQL techniques is crucial for efficient and effective database management. These techniques empower developers to extract, manipulate, and analyze data with greater precision and flexibility. By utilizing these advanced features, one can optimize query performance, reduce data redundancy, and gain deeper insights into the stored information. This section delves into key advanced SQL techniques, providing practical examples to illustrate their application.

JOINs to Retrieve Data from Multiple Tables

JOINs are fundamental for combining data from two or more tables based on a related column. This enables the retrieval of comprehensive information that spans multiple tables. Different JOIN types offer distinct ways of combining data, influencing the final result set.

- INNER JOIN: Returns only the rows where there is a match in both tables based on the join condition. It effectively filters out rows that do not have a corresponding entry in the related table.

Example: Retrieve customer names and their orders. Assuming a `customers` table with `customer_id` and `customer_name` and an `orders` table with `order_id`, `customer_id`, and `order_date`.

```sql
SELECT customers.customer_name, orders.order_id, orders.order_date
FROM customers
INNER JOIN orders ON customers.customer_id = orders.customer_id;
```

- LEFT JOIN (or LEFT OUTER JOIN): Returns all rows from the left table and the matching rows from the right table. If there is no match in the right table, the columns from the right table will contain NULL values.

Example: Retrieve all customers and their orders, even if a customer has no orders.

```sql
SELECT customers.customer_name, orders.order_id, orders.order_date
FROM customers
LEFT JOIN orders ON customers.customer_id = orders.customer_id;
```

- RIGHT JOIN (or RIGHT OUTER JOIN): Returns all rows from the right table and the matching rows from the left table. If there is no match in the left table, the columns from the left table will contain NULL values.

Example: Retrieve all orders and the customer names, even if an order is not associated with a customer.

```sql
SELECT customers.customer_name, orders.order_id, orders.order_date
FROM customers
RIGHT JOIN orders ON customers.customer_id = orders.customer_id;
```

- FULL OUTER JOIN: Returns all rows from both tables, with matching rows combined. If there is no match, the missing columns will contain NULL values. Note that MySQL does not directly support `FULL OUTER JOIN`; however, it can be emulated using `UNION` with `LEFT JOIN` and `RIGHT JOIN`.

Example (Emulation): Retrieve all customers and all orders, combining matching rows and including NULL values for unmatched rows.

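```sql
SELECT customers.customer_name, orders.order_id, orders.order_date
FROM customers
LEFT JOIN orders ON customers.customer_id = orders.customer_id
UNION
SELECT customers.customer_name, orders.order_id, orders.order_date
FROM customers
RIGHT JOIN orders ON customers.customer_id = orders.customer_id;
```

Because `UNION` removes duplicate rows, this form also collapses any result rows that are legitimately identical. If that matters, use `UNION ALL` and add `WHERE customers.customer_id IS NULL` to the second `SELECT` so that it contributes only the unmatched orders.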
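The row-count differences between these joins are easy to see on a tiny dataset. This sketch uses Python's built-in `sqlite3` module as a stand-in for MySQL; the sample rows (including one order with no customer) and the function name `join_demo` are hypothetical. Because older SQLite versions lack `RIGHT JOIN`, the right-hand half of the full outer join is written as a `LEFT JOIN` with the tables swapped, which is equivalent, and it uses the `UNION ALL` form with an `IS NULL` filter so that only the unmatched orders are added.

```python
import sqlite3


def join_demo():
    """Return (inner_rows, left_rows, full_rows) as (customer_name, order_id) tuples."""
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()
    cur.executescript("""
        CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT);
        CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT);
        INSERT INTO customers VALUES (1, 'Ann'), (2, 'Ben'), (3, 'Cy');
        INSERT INTO orders VALUES (10, 1, '2024-01-05'), (11, 1, '2024-02-01'),
                                  (12, 2, '2024-02-03'), (13, NULL, '2024-03-01');
    """)
    inner = cur.execute("""
        SELECT c.customer_name, o.order_id FROM customers c
        INNER JOIN orders o ON c.customer_id = o.customer_id
    """).fetchall()
    left = cur.execute("""
        SELECT c.customer_name, o.order_id FROM customers c
        LEFT JOIN orders o ON c.customer_id = o.customer_id
    """).fetchall()
    # Full outer join emulation: every customer (matched or not), plus the
    # orders that have no matching customer at all
    full = cur.execute("""
        SELECT c.customer_name, o.order_id FROM customers c
        LEFT JOIN orders o ON c.customer_id = o.customer_id
        UNION ALL
        SELECT c.customer_name, o.order_id FROM orders o
        LEFT JOIN customers c ON c.customer_id = o.customer_id
        WHERE c.customer_id IS NULL
    """).fetchall()
    conn.close()
    return inner, left, full
```

The inner join returns three rows, the left join adds Cy with a `None` order, and the full outer emulation further adds order 13 with a `None` customer name.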

Aggregate Functions to Analyze Data

Aggregate functions are used to perform calculations on a set of values, returning a single result. These functions are essential for summarizing and analyzing data, providing insights into trends, patterns, and overall data characteristics.

- COUNT(): `COUNT(*)` returns the number of rows that match the query's conditions; `COUNT(column)` counts only the non-NULL values in that column.

Example: Count the total number of orders in the `orders` table.

```sql
SELECT COUNT(*) FROM orders;
```

- SUM(): Returns the sum of a numeric column.

Example: Calculate the total revenue from all orders, assuming an `order_total` column in the `orders` table.

```sql
SELECT SUM(order_total) FROM orders;
```

- AVG(): Returns the average value of a numeric column.

Example: Calculate the average order total.

```sql
SELECT AVG(order_total) FROM orders;
```

- MIN(): Returns the minimum value of a column.

Example: Find the lowest order total.

```sql
SELECT MIN(order_total) FROM orders;
```

- MAX(): Returns the maximum value of a column.

Example: Find the highest order total.

```sql
SELECT MAX(order_total) FROM orders;
```
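Running all five functions over a small table makes two details visible: aggregates skip `NULL` values (only `COUNT(*)` counts every row), and `GROUP BY` turns an overall figure into per-group figures. The sketch uses Python's built-in `sqlite3` module as a stand-in for MySQL, with `REAL` in place of the `DECIMAL` type a MySQL schema would typically use for money; the rows and the function name `order_stats` are hypothetical.

```python
import sqlite3


def order_stats():
    """Return overall aggregates and per-customer (id, row count, total) tuples."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, order_total REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, order_total) VALUES (?, ?)",
        [(1, 100.0), (1, 50.0), (2, 30.0), (2, None)],  # one order has no total yet
    )
    overall = conn.execute("""
        SELECT COUNT(*), COUNT(order_total), SUM(order_total), AVG(order_total),
               MIN(order_total), MAX(order_total)
        FROM orders
    """).fetchone()
    per_customer = conn.execute("""
        SELECT customer_id, COUNT(*), SUM(order_total)
        FROM orders
        GROUP BY customer_id
        ORDER BY customer_id
    """).fetchall()
    conn.close()
    return overall, per_customer
```

Here `COUNT(*)` sees four rows but `COUNT(order_total)` only three, and the average is 180 / 3 = 60 rather than 180 / 4, because the `NULL` total is ignored.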

Subqueries to Perform Complex Data Retrieval Operations

Subqueries, also known as nested queries, are queries embedded within another query. They enable the retrieval of data based on the results of another query, allowing for complex filtering and data manipulation. Subqueries can be used in various clauses, including `SELECT`, `FROM`, `WHERE`, and `HAVING`.

- Subqueries in the WHERE clause: Used to filter results based on the output of the subquery.

Example: Find all customers who have placed an order with a total amount greater than the average order amount.

```sql
SELECT customer_id, customer_name
FROM customers
WHERE customer_id IN (SELECT customer_id FROM orders WHERE order_total > (SELECT AVG(order_total) FROM orders));
```

- Subqueries in the SELECT clause: Used to retrieve calculated values for each row.

Example: Retrieve customer names and the total number of orders placed by each customer.

```sql
SELECT customer_name, (SELECT COUNT(*) FROM orders WHERE customers.customer_id = orders.customer_id) AS total_orders
FROM customers;
```

- Subqueries in the FROM clause: Used to create derived tables or inline views.

Example: Retrieve the average order total for each customer, using a derived table.

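```sql
SELECT customer_id, AVG(order_total) AS average_order_total
FROM (SELECT customer_id, order_total FROM orders) AS order_details
GROUP BY customer_id;
```

This derived table only passes rows through, so a plain `GROUP BY` on `orders` would return the same result; derived tables become useful when the inner query itself filters or aggregates.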
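Many subqueries have an equivalent `JOIN` formulation, and checking that both return the same rows is a useful habit when rewriting one into the other. This sketch uses Python's built-in `sqlite3` module as a stand-in for MySQL; the sample data and the function name `subquery_vs_join` are hypothetical. Note that the `JOIN` version counts `o.order_id` rather than `*`, so a customer with no orders gets 0 instead of 1.

```python
import sqlite3


def subquery_vs_join():
    """Return (correlated_counts, joined_counts, above_average_customers)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT);
        CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, order_total REAL);
        INSERT INTO customers VALUES (1, 'Ann'), (2, 'Ben'), (3, 'Cy');
        INSERT INTO orders (customer_id, order_total) VALUES (1, 100.0), (1, 20.0), (2, 30.0);
    """)
    # Correlated subquery in the SELECT list: runs once per customer row
    correlated = conn.execute("""
        SELECT customer_name,
               (SELECT COUNT(*) FROM orders
                WHERE orders.customer_id = customers.customer_id) AS total_orders
        FROM customers ORDER BY customer_name
    """).fetchall()
    # Same result with a LEFT JOIN and GROUP BY
    joined = conn.execute("""
        SELECT c.customer_name, COUNT(o.order_id) AS total_orders
        FROM customers c LEFT JOIN orders o ON o.customer_id = c.customer_id
        GROUP BY c.customer_id, c.customer_name ORDER BY c.customer_name
    """).fetchall()
    # Nested subqueries in WHERE: customers with an order above the average (50)
    above_avg = conn.execute("""
        SELECT customer_name FROM customers
        WHERE customer_id IN (SELECT customer_id FROM orders
                              WHERE order_total > (SELECT AVG(order_total) FROM orders))
    """).fetchall()
    conn.close()
    return correlated, joined, above_avg
```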

Stored Procedures and Functions to Encapsulate Database Logic

Stored procedures and functions are named blocks of SQL stored in the database server. They encapsulate database logic, promoting code reuse and modularity, and can improve performance by reducing round trips between the application and the server. Stored procedures can accept input parameters and return output parameters or result sets, while stored functions return exactly one value and can be called inside SQL expressions.

- Stored Procedures: A set of SQL statements that can be executed as a single unit. They can perform complex operations, including data manipulation, and can accept input parameters and return results.

Example: A stored procedure to add a new order.

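```sql
DELIMITER //

CREATE PROCEDURE AddNewOrder (
    IN p_customer_id INT,
    IN p_order_date DATE,
    IN p_order_total DECIMAL(10, 2)
)
BEGIN
    INSERT INTO orders (customer_id, order_date, order_total)
    VALUES (p_customer_id, p_order_date, p_order_total);

    -- Return the AUTO_INCREMENT id generated by the INSERT above
    SELECT LAST_INSERT_ID() AS new_order_id;
END //

DELIMITER ;
```

The `DELIMITER` commands are needed in the `mysql` command-line client so that the semicolons inside the procedure body do not end the `CREATE PROCEDURE` statement early; most drivers and GUI tools send the whole statement at once and do not need them. Prefixing the parameters with `p_` keeps them distinct from the `orders` column names.

To execute the procedure:

```sql
CALL AddNewOrder(123, '2024-01-20', 100.00);
```

- Functions: A set of SQL statements that return a single value. They are typically used for calculations or data transformations.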

Example: A function to calculate the total sales for a specific customer.

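```sql
DELIMITER //

CREATE FUNCTION CalculateTotalSales (
    p_customer_id INT
)
RETURNS DECIMAL(10, 2)
READS SQL DATA
BEGIN
    DECLARE total_sales DECIMAL(10, 2);

    -- COALESCE returns 0.00 instead of NULL for customers with no orders
    SELECT COALESCE(SUM(order_total), 0) INTO total_sales
    FROM orders
    WHERE customer_id = p_customer_id;

    RETURN total_sales;
END //

DELIMITER ;
```

The parameter is named `p_customer_id` because, inside a stored routine, MySQL resolves a name that matches both a parameter and a column to the parameter; with a parameter called `customer_id`, the condition `WHERE customer_id = customer_id` would compare the parameter with itself and sum every order in the table. The function is declared `READS SQL DATA` rather than `DETERMINISTIC` because its result depends on the current contents of `orders`.

To use the function:

```sql
SELECT customer_name, CalculateTotalSales(customer_id) AS total_sales
FROM customers;
```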

Table Illustrating Different SQL JOIN Types

The following table compares the SQL JOIN types using simplified data. `TableA` has columns `A_ID` and `A_Value` and contains the rows (1, 'ValueA1'), (2, 'ValueA2'), and (3, 'ValueA3'). `TableB` has columns `B_ID` and `B_Value` and contains the rows (2, 'ValueB2') and (3, 'ValueB3'). Result rows are shown as (`A_ID`, `A_Value`, `B_ID`, `B_Value`).

| JOIN Type | SQL Example | Result |
|---|---|---|
| INNER JOIN | `SELECT * FROM TableA INNER JOIN TableB ON TableA.A_ID = TableB.B_ID;` | Only rows where `A_ID` matches `B_ID`: (2, 'ValueA2', 2, 'ValueB2'), (3, 'ValueA3', 3, 'ValueB3') |
| LEFT JOIN | `SELECT * FROM TableA LEFT JOIN TableB ON TableA.A_ID = TableB.B_ID;` | All rows from `TableA`, with NULL in the `TableB` columns where there is no match: (1, 'ValueA1', NULL, NULL), (2, 'ValueA2', 2, 'ValueB2'), (3, 'ValueA3', 3, 'ValueB3') |
| RIGHT JOIN | `SELECT * FROM TableA RIGHT JOIN TableB ON TableA.A_ID = TableB.B_ID;` | All rows from `TableB`, with NULL in the `TableA` columns where there is no match. Every `TableB` row has a match in this data, so the result equals the INNER JOIN: (2, 'ValueA2', 2, 'ValueB2'), (3, 'ValueA3', 3, 'ValueB3') |
| FULL OUTER JOIN (emulated) | `SELECT * FROM TableA LEFT JOIN TableB ON TableA.A_ID = TableB.B_ID UNION SELECT * FROM TableA RIGHT JOIN TableB ON TableA.A_ID = TableB.B_ID;` | All rows from both tables, combined where they match and padded with NULL where they do not. With this data the result equals the LEFT JOIN: (1, 'ValueA1', NULL, NULL), (2, 'ValueA2', 2, 'ValueB2'), (3, 'ValueA3', 3, 'ValueB3') |

Optimizing Database Performance

Database performance is critical for the responsiveness and scalability of any application that relies on a database. Slow queries and inefficient database operations can lead to a poor user experience, increased server load, and ultimately, a failure to meet business objectives. This section focuses on techniques and strategies to optimize MySQL database performance, ensuring efficient data retrieval and manipulation.

Using EXPLAIN to Analyze Query Performance

Understanding how MySQL executes queries is the first step in optimization. The `EXPLAIN` statement shows the execution plan the optimizer has chosen for a query. To use `EXPLAIN`, prepend it to your `SELECT` query:

```sql
EXPLAIN SELECT * FROM your_table WHERE your_column = 'your_value';
```

The output of `EXPLAIN` describes how MySQL intends to execute the query, including:

- `id`: The identifier for the query.

- `select_type`: The type of SELECT operation (e.g., SIMPLE, PRIMARY, SUBQUERY).

- `table`: The table being accessed.

- `type`: The join type. This is one of the most important indicators of query performance. Common types include:

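- `ALL`: Full table scan (usually the worst performance).

- `index`: Full scan of an index rather than of the table.

- `range`: An index is used to retrieve only the rows within a given range (for example, with `BETWEEN`, `>`, or `IN`).

- `ref`: Rows are matched through a non-unique index (or a leftmost prefix of an index) compared with a value.

- `eq_ref`: At most one row is read from this table for each row from the preceding tables, through a `PRIMARY KEY` or `UNIQUE NOT NULL` index.

- `const`: At most one matching row, read once at the start of the query, as when a primary key is compared with a constant (among the best performance).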

- `possible_keys`: The indexes MySQL could use.

- `key`: The index MySQL actually chose to use.

- `key_len`: The length of the index used.

- `ref`: The columns or constants compared against the index named in `key`.

- `rows`: The estimated number of rows MySQL will examine. A higher number here often indicates performance issues.

- `Extra`: Additional information, such as “Using where” (filtering performed in the `WHERE` clause) or “Using index” (query uses an index to retrieve data).

By analyzing the `EXPLAIN` output, you can identify potential bottlenecks and areas for optimization. For example, if the `type` is `ALL`, it suggests a full table scan, which is usually inefficient and indicates a need for an index. If the `rows` value is very high, it may indicate a need for better filtering or indexing.
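The effect an index has on a plan can be reproduced locally. MySQL's `EXPLAIN` needs a MySQL server, so this sketch uses SQLite's `EXPLAIN QUERY PLAN` through Python's built-in `sqlite3` module as an analogue: a `SCAN` there corresponds roughly to `type = ALL`, and a `SEARCH ... USING INDEX` to an index lookup such as `ref`. The exact wording of the plan text varies between SQLite versions, and the table, index, and function names are hypothetical.

```python
import sqlite3


def plan(conn, sql, params=()):
    """Return the human-readable 'detail' text of each SQLite query-plan row."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the detail text is the last column


def index_demo():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, order_total REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, order_total) VALUES (?, ?)",
        [(i % 100, i * 1.5) for i in range(1000)],
    )
    query = "SELECT order_id, order_total FROM orders WHERE customer_id = ?"

    before = plan(conn, query, (42,))  # no usable index yet: expect a full SCAN
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    after = plan(conn, query, (42,))   # expect a SEARCH using idx_orders_customer

    conn.close()
    return before, after
```

On a recent SQLite, the first plan reads along the lines of `SCAN orders`, and the second names `idx_orders_customer`, mirroring the move away from `ALL` that an index produces in MySQL's `EXPLAIN`.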

Optimizing SQL Queries

Optimizing SQL queries involves several techniques to improve their efficiency. These techniques include the strategic use of indexes, rewriting queries, and avoiding inefficient operations.

- Using Indexes: Indexes are crucial for fast data retrieval. They act like an index in a book, allowing the database to quickly locate the desired data without scanning the entire table.

- Create indexes on columns frequently used in `WHERE` clauses, `JOIN` conditions, and `ORDER BY` clauses.

- Choose appropriate index types (e.g., `BTREE` for most cases, `FULLTEXT` for text searches).

- Consider composite indexes (indexes on multiple columns) for queries that filter or sort on multiple columns.

- Regularly analyze and maintain indexes, removing unused or redundant indexes.

- Avoiding Full Table Scans: Full table scans are resource-intensive and should be avoided whenever possible.

- Ensure that queries use indexes effectively. Use `EXPLAIN` to verify index usage.

- Optimize `WHERE` clauses to filter data efficiently.

- Avoid using `SELECT *`; specify only the necessary columns.

- Consider using pagination for large result sets to avoid retrieving all data at once.

- Rewriting Queries: Sometimes, rewriting a query can significantly improve its performance.

- Simplify complex queries.

- Consider rewriting subqueries as `JOIN`s where the optimizer handles them poorly, and confirm the improvement with `EXPLAIN`.

- Optimize `WHERE` clauses for efficient filtering.

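- Avoid applying functions to indexed columns in the `WHERE` clause, as this can prevent index usage. For example, instead of `WHERE YEAR(date_column) = 2023`, use `WHERE date_column >= '2023-01-01' AND date_column < '2024-01-01'`. The half-open range also covers `DATETIME` values later in the day on December 31, which `BETWEEN '2023-01-01' AND '2023-12-31'` would miss.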
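The advice about functions on indexed columns, and about date ranges, can be checked with a small experiment. This sketch uses Python's built-in `sqlite3` module as a stand-in for MySQL, with SQLite's `strftime('%Y', ...)` playing the role of MySQL's `YEAR()` and dates stored as ISO-8601 text; the sample rows and the names `plan_details` and `date_filter_demo` are hypothetical. The wrapped predicate forces a scan, the half-open range can search the index, and `BETWEEN` with a bare end date misses a timestamp later on December 31.

```python
import sqlite3


def plan_details(conn, sql):
    """Return the human-readable lines of SQLite's query plan."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


def date_filter_demo():
    """Return {name: (row_count, plan_lines)} for three ways of filtering by year."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, order_date TEXT)")
    conn.executemany(
        "INSERT INTO orders (order_date) VALUES (?)",
        [("2022-12-31 23:00:00",), ("2023-03-15 10:00:00",),
         ("2023-12-31 18:30:00",), ("2024-01-01 00:00:00",)],
    )
    conn.execute("CREATE INDEX idx_orders_date ON orders (order_date)")

    queries = {
        # Function on the column: the index cannot be used for a lookup
        "wrapped": "SELECT COUNT(*) FROM orders WHERE strftime('%Y', order_date) = '2023'",
        # Half-open range on the bare column: index-friendly and complete
        "range": "SELECT COUNT(*) FROM orders "
                 "WHERE order_date >= '2023-01-01' AND order_date < '2024-01-01'",
        # BETWEEN with a bare end date excludes later times on 2023-12-31
        "between": "SELECT COUNT(*) FROM orders "
                   "WHERE order_date BETWEEN '2023-01-01' AND '2023-12-31'",
    }
    results = {}
    for name, sql in queries.items():
        count = conn.execute(sql).fetchone()[0]
        results[name] = (count, plan_details(conn, sql))
    conn.close()
    return results
```

The wrapped and range queries both count two 2023 orders, but only the range query's plan starts with `SEARCH`; the `BETWEEN` query counts just one because `2023-12-31 18:30:00` sorts after `2023-12-31`.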

Caching Frequently Accessed Data

Caching frequently accessed data can dramatically improve performance by reducing the load on the database.

Caching involves storing frequently accessed data in a faster, more readily available location, such as memory. When a request for data arrives, the system first checks the cache. If the data is found in the cache (a “cache hit”), it is retrieved quickly. If the data is not in the cache (a “cache miss”), it is retrieved from the database and then stored in the cache for future use.

- Application-Level Caching: Implement caching within your application code, typically backed by an in-memory data store such as Memcached or Redis.

- Cache frequently accessed data, such as product catalogs, user profiles, and configuration settings.

- Implement cache invalidation strategies to ensure data consistency (e.g., expire cached data after a certain time, or invalidate the cache when data is updated).

- Database Caching: MySQL itself provides caching mechanisms.

- The query cache (deprecated as of MySQL 5.7.20 and removed in MySQL 8.0) cached the results of identical `SELECT` statements. It is not available in current versions, but it is worth understanding if you maintain older systems.

- MySQL’s InnoDB buffer pool caches table data and indexes in memory. Properly sizing it (the `innodb_buffer_pool_size` setting) is crucial for performance.

- Content Delivery Networks (CDNs): For static content (images, CSS, JavaScript), use a CDN to cache content closer to the users, reducing latency.
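The cache-aside pattern with time-based expiry and explicit invalidation fits in a few lines. This sketch keeps entries in an in-process Python dictionary purely for illustration; in production the same logic would typically sit in front of a shared store such as Redis or Memcached. The class name `TTLCache` and the injectable `clock` parameter (which makes expiry testable) are assumptions, not part of any library.

```python
import time


class TTLCache:
    """Cache-aside helper: check the cache first, fall back to a loader on a miss."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (value, expires_at)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit
        value = loader(key)  # cache miss or expired entry: query the database
        self._store[key] = (value, now + self.ttl)
        return value

    def invalidate(self, key):
        """Drop a key after the underlying row changes, so the next read reloads it."""
        self._store.pop(key, None)
```

A caller would pass a function that runs the real query, for example `cache.get_or_load(product_id, fetch_product_from_db)` with a hypothetical `fetch_product_from_db`, and call `cache.invalidate(product_id)` after updating that product. Expiry bounds how stale an entry can get; invalidation removes staleness for writes the application itself makes.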

Monitoring Database Performance and Identifying Bottlenecks

Continuous monitoring is essential to identify and address performance bottlenecks. Monitoring involves collecting metrics and analyzing them to identify areas for improvement.

- Database Monitoring Tools: Use monitoring tools to track key performance indicators (KPIs).

- MySQL Enterprise Monitor: A commercial tool providing comprehensive monitoring and analysis.

- Percona Monitoring and Management (PMM): A free and open-source monitoring platform with Grafana-based dashboards.

- Other Tools: MySQL's built-in Performance Schema and `sys` schema expose many of the same metrics, and various third-party tools and plugins are also available.

- Key Performance Indicators (KPIs): Monitor these metrics to identify performance issues.

- Query Execution Time: The time it takes for queries to execute. High execution times indicate slow queries.

- Number of Queries per Second (QPS): The number of queries processed by the database per second.

- Database Load: The load on the database server (e.g., CPU usage, memory usage, disk I/O).

- Slow Query Log: The slow query log records queries that take longer than a specified threshold. Analyze the slow query log to identify slow queries that need optimization.

- Connections: The number of active connections to the database. High connection counts can indicate resource exhaustion.

- Buffer Pool Hit Rate: The percentage of times data is found in the buffer pool. A low hit rate suggests that the buffer pool is too small or that the database is frequently accessing data from disk.

- Identifying Bottlenecks: Analyze the collected data to identify bottlenecks.

- Slow Queries: Identify and optimize slow queries.

- High CPU Usage: Indicates CPU-intensive operations. Optimize queries, add indexes, or consider upgrading the server.

- High Disk I/O: Suggests slow disk access. Optimize queries, ensure indexes are used, or consider faster storage.

- Memory Issues: May require increasing the buffer pool size or optimizing memory usage.
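The buffer pool hit rate is usually derived from two InnoDB status counters: `Innodb_buffer_pool_read_requests` (logical read requests) and `Innodb_buffer_pool_reads` (requests that could not be satisfied from the buffer pool and had to read from disk). Both are cumulative since server start, so comparing two snapshots reflects current behavior better than a single reading. This sketch assumes the snapshots have already been fetched as name/value pairs, for example from `SHOW GLOBAL STATUS LIKE 'Innodb_buffer_pool_read%'`; the function name is hypothetical.

```python
def buffer_pool_hit_rate(before, after):
    """Hit rate between two status snapshots (dicts of variable name -> value).

    MySQL returns status values as strings, so they are converted with int().
    Returns None when no read requests happened in the interval.
    """
    requests = (int(after["Innodb_buffer_pool_read_requests"])
                - int(before["Innodb_buffer_pool_read_requests"]))
    disk_reads = (int(after["Innodb_buffer_pool_reads"])
                  - int(before["Innodb_buffer_pool_reads"]))
    if requests <= 0:
        return None
    return 1.0 - disk_reads / requests
```

With a driver cursor, each snapshot could be built as `dict(cursor.fetchall())` after running the `SHOW GLOBAL STATUS` statement, since each result row is a (name, value) pair. A result of 0.99 means 1% of read requests went to disk during the interval.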

Illustration: Query Optimization Process

The following illustration details a simplified query optimization process. The image depicts a flowchart that starts with the user’s request and ends with an optimized query.

The process begins with a “User Request” (a rectangular box), which leads to a decision point (a diamond) labeled “Is Query Slow?”. If the answer is “Yes,” the process continues to “Analyze Query with EXPLAIN” and then to a second decision point, “Does Query Use Indexes?”. If the query does not use indexes, the process goes to “Add Indexes” and then to “Test Query”; if it does, it moves directly to “Test Query”. From “Test Query,” the flow returns to “Is Query Slow?”: a “Yes” sends it back to “Analyze Query with EXPLAIN,” and a “No” leads to the final “Query Optimized” box.

This feedback loop captures the iterative nature of tuning. `EXPLAIN` diagnoses issues such as full table scans, missing indexes, or inefficient join strategies; missing indexes are added; and the query is retested, repeating until it is no longer slow. The illustration underlines the importance of monitoring, analyzing, and making changes based on measured results.

Security Considerations

Securing a MySQL database is paramount to protect sensitive information and maintain data integrity. Implementing robust security measures is crucial to prevent unauthorized access, data breaches, and malicious attacks. This section outlines best practices for securing your MySQL database, covering password management, access control, SQL injection prevention, data encryption, backup and recovery procedures, and common security vulnerabilities with their mitigation strategies.

Password Management and Access Control

Effective password management and access control are the first lines of defense against unauthorized database access. This involves creating strong passwords, regularly rotating them, and implementing a well-defined user access policy.

- Password Strength Requirements: Enforce strong password policies to prevent brute-force attacks. Require a minimum password length (e.g., 12 characters), and mandate the use of a combination of uppercase and lowercase letters, numbers, and special characters.

- Password Rotation: Regularly change passwords. Establish a schedule for password rotation (e.g., every 90 days) to minimize the impact of compromised credentials.

- User Privileges: Grant users only the necessary privileges (least privilege principle). Avoid granting excessive permissions, such as `SUPER` or `FILE` privileges, unless absolutely required. Use the `GRANT` and `REVOKE` statements to manage user privileges effectively.

- User Account Management: Regularly review user accounts and permissions. Disable or remove accounts for former employees or users who no longer require database access.

- Authentication Plugins: Use a strong authentication plugin. In MySQL 8.0 and later, `caching_sha2_password` is the default and is preferred over the legacy `mysql_native_password`, which is deprecated in recent releases. Where available, also consider options that support two-factor authentication (2FA).

- Secure Connection Protocols: Ensure that all connections to the database are encrypted using SSL/TLS. This prevents eavesdropping and man-in-the-middle attacks.
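An application can check a proposed password against the policy above before sending it to MySQL. This sketch encodes the example rules (at least 12 characters, uppercase and lowercase letters, a digit, and a special character, here taken to mean ASCII punctuation); the function name is hypothetical. On the server side, MySQL's `validate_password` component can enforce comparable rules when accounts are created or their passwords changed.

```python
import string


def password_policy_violations(password, min_length=12):
    """Return a list of rule violations; an empty list means the password passes."""
    problems = []
    if len(password) < min_length:
        problems.append(f"shorter than {min_length} characters")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letter")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isdigit() for c in password):
        problems.append("no digit")
    if not any(c in string.punctuation for c in password):
        problems.append("no special character")
    return problems
```

Returning every violation at once, instead of failing on the first, lets a sign-up form tell the user everything that needs fixing in one round.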

Preventing SQL Injection Attacks

SQL injection is a common vulnerability that allows attackers to manipulate SQL queries and gain unauthorized access to the database. Implementing proper input validation and using parameterized queries are crucial to prevent SQL injection attacks.

- Input Validation: Always validate user input (type, length, format, allowed values) before it is used in a query. Escaping special characters is error-prone and should only be a fallback, never a substitute for parameterized queries.

- Parameterized Queries (Prepared Statements): Use parameterized queries or prepared statements. They keep the SQL text separate from the data (the driver binds or safely escapes each value), so user-supplied values are always treated as literals and cannot change the structure of the query.

- Stored Procedures: Stored procedures encapsulate SQL logic, which makes database operations easier to manage and secure. They protect against SQL injection only if they do not build dynamic SQL by concatenating input; when a procedure needs dynamic SQL, use `PREPARE ... EXECUTE ... USING` with placeholders.

- Web Application Firewall (WAF): Deploy a Web Application Firewall (WAF) in front of your application to detect and block common SQL injection patterns in incoming HTTP traffic. A WAF is an additional layer of defense, not a replacement for parameterized queries.

- Regular Security Audits: Conduct regular security audits of your application and database to identify and address potential vulnerabilities. Use automated tools and manual reviews to assess the security posture.
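The difference between string concatenation and a parameterized query can be shown with a small, self-contained sketch. It uses Python's built-in `sqlite3` module as a stand-in for a MySQL connection so it runs without a server; the table and function names are hypothetical. MySQL drivers such as mysql-connector-python and PyMySQL use `%s` placeholders rather than `?`, but the principle is the same.

```python
import sqlite3

# sqlite3 stands in for a MySQL connection in this sketch.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users (username, email) VALUES (?, ?)",
    [("alice", "alice@example.com"), ("bob", "bob@example.com")],
)


def find_user_unsafe(username: str):
    # VULNERABLE: user input is pasted directly into the SQL text.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(username: str):
    # SAFE: the value is bound to a placeholder and never parsed as SQL.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


malicious = "' OR '1'='1"
print(find_user_unsafe(malicious))  # every row in the table
print(find_user_safe(malicious))    # []
```

The concatenated version turns the input into `WHERE username = '' OR '1'='1'`, which matches every row; the parameterized version looks for a user literally named `' OR '1'='1` and finds none.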

Using Encryption to Protect Sensitive Data

Encrypting sensitive data at rest and in transit protects it from unauthorized access. MySQL offers several options, including InnoDB data-at-rest (tablespace) encryption, column-level encryption functions, and SSL/TLS for client connections.

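- Column Encryption: Encrypt sensitive columns with functions such as `AES_ENCRYPT()` and `AES_DECRYPT()`. This keeps the values unreadable if tables or backups are exposed, provided the key is not stored alongside the data. Note that the default `block_encryption_mode` is `aes-128-ecb`; prefer a mode such as `aes-256-cbc` with a random initialization vector.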

- Key Management: Securely manage encryption keys. Store encryption keys separately from the data and use a key management system to protect them.

- SSL/TLS Encryption: Use SSL/TLS to secure the connection between the client and the database server, which prevents eavesdropping and protects data in transit. To require encrypted connections, set `require_secure_transport=ON` on the server or add `REQUIRE SSL` to individual accounts.

- Data Masking: Implement data masking techniques to protect sensitive data in non-production environments. Data masking replaces sensitive data with realistic but non-sensitive values.

- Encryption at the Application Layer: Consider encrypting sensitive data at the application layer before storing it in the database. This provides an additional layer of security.
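For the data masking step, a minimal sketch (assuming rows pass through a Python job on their way to a non-production database) could replace email addresses with deterministic pseudonyms and hide all but the last four digits of card numbers. The HMAC-based pseudonym is one possible technique, chosen so the same real value always maps to the same fake value and joins between masked tables still line up; `MASKING_KEY` is a placeholder that should come from your key store. Unlike encryption, masking is not meant to be reversed.

```python
import hashlib
import hmac

# Placeholder secret for the masking job; load it from a key store in practice.
MASKING_KEY = b"replace-with-a-secret-from-your-key-store"


def pseudonym(value: str, length: int = 10) -> str:
    """Deterministic token: the same input always yields the same token."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def mask_email(email: str) -> str:
    # Realistic-looking but fake address on the reserved example.com domain.
    return f"user_{pseudonym(email.lower())}@example.com"


def mask_card(number: str, visible: int = 4) -> str:
    """Keep only the last `visible` digits, as on a printed receipt."""
    digits = [ch for ch in number if ch.isdigit()]
    hidden = max(len(digits) - visible, 0)
    return "*" * hidden + "".join(digits[hidden:])


row = {"id": 7, "email": "Alice@Corp.example", "card": "4111 1111 1111 1111"}
masked = {**row, "email": mask_email(row["email"]), "card": mask_card(row["card"])}
print(masked["card"])  # ************1111
```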

Configuring Database Backups and Recovery Procedures

Regular backups and well-defined recovery procedures are essential to ensure data availability and prevent data loss. This involves creating a backup strategy, testing the backups, and establishing a recovery plan.

- Backup Strategy: Implement a comprehensive backup strategy that includes both full and incremental backups. Full backups capture the entire database, while incremental backups only capture changes since the last backup.

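- Backup Frequency: Base backup frequency on your Recovery Point Objective (RPO), the maximum amount of data loss you can tolerate, and your Recovery Time Objective (RTO), the maximum time a restore may take. For example, an RPO of one hour requires backups (or binary log archiving) at least hourly. Back up critical databases more frequently.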

- Backup Storage: Store backups securely in a separate location from the primary database server. Consider using offsite storage or cloud-based backup solutions.

- Backup Verification: Regularly verify the integrity of your backups by restoring them to a test environment. This ensures that the backups are valid and can be used for recovery.

- Recovery Plan: Develop a detailed recovery plan that outlines the steps required to restore the database from a backup. Test the recovery plan regularly to ensure its effectiveness.

- Automated Backups: Automate the backup process using scripts or database management tools. Automation reduces the risk of human error and ensures consistent backups.
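Backup automation often starts as a scheduled script around `mysqldump`. The sketch below only builds the command line (running it is left to a scheduler, for example via `subprocess.run(cmd, check=True)` from cron) and adds a simple check that a backup interval satisfies the RPO. The database name, paths, and option file are hypothetical; reading credentials from an option file keeps the password out of the process list.

```python
from datetime import datetime, timedelta
from pathlib import Path


def build_dump_command(database: str, out_dir: Path, now: datetime,
                       defaults_file: str) -> list[str]:
    """Build a mysqldump argument list for one timestamped logical backup."""
    outfile = out_dir / f"{database}-{now:%Y%m%d-%H%M%S}.sql"
    return [
        "mysqldump",
        # Must be the first option; supplies user/password from a protected file.
        f"--defaults-extra-file={defaults_file}",
        "--single-transaction",  # consistent InnoDB snapshot without locking tables
        "--routines",            # include stored procedures and functions
        "--events",              # include scheduled events
        f"--result-file={outfile}",
        "--databases", database,
    ]


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Worst-case loss is one full interval (ignoring binary log replay)."""
    return backup_interval <= rpo


cmd = build_dump_command("shop", Path("/var/backups/mysql"),
                         datetime(2024, 5, 1, 2, 0), "/etc/mysql/backup.cnf")
print(meets_rpo(timedelta(hours=1), timedelta(hours=4)))  # True
```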

Common Security Vulnerabilities and Mitigation Strategies

Identifying and addressing common security vulnerabilities is critical to maintaining a secure database environment. Each entry below pairs a common vulnerability with its mitigation strategy.

- SQL Injection:

  - Vulnerability: Allowing user input to directly influence SQL queries without proper validation.

  - Mitigation: Use parameterized queries, validate and sanitize user input, and implement a Web Application Firewall (WAF).

- Weak Passwords:

  - Vulnerability: Using weak or easily guessable passwords.

  - Mitigation: Enforce strong password policies, require a minimum password length, and regularly rotate passwords.

- Unsecured Connections:

  - Vulnerability: Transmitting data over an unencrypted connection.

  - Mitigation: Use SSL/TLS encryption for all connections, and configure the MySQL server to require encrypted connections.

- Lack of Access Control:

  - Vulnerability: Granting excessive privileges to users.

  - Mitigation: Implement the principle of least privilege, grant users only the necessary permissions, and regularly review user accounts.

- Data Breaches:

  - Vulnerability: Unauthorized access to sensitive data.

  - Mitigation: Encrypt sensitive data at rest and in transit, implement data masking, and regularly monitor database activity.

- Improper Backup and Recovery:

  - Vulnerability: Inadequate backup and recovery procedures.

  - Mitigation: Implement a comprehensive backup strategy, regularly test backups, and develop a detailed recovery plan.

- Outdated Software:

  - Vulnerability: Running outdated versions of MySQL with known vulnerabilities.

  - Mitigation: Regularly update the MySQL server and related software to the latest versions.

- Configuration Errors:

  - Vulnerability: Incorrectly configured database settings.

  - Mitigation: Review and harden database configuration settings, disable unnecessary features, and regularly audit configurations.
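Configuration errors and outdated versions can be caught with a periodic audit. The following sketch compares server settings, assumed to have been fetched with `SHOW GLOBAL VARIABLES` and turned into a dict (for example `dict(cursor.fetchall())`), against a hardening baseline. The variable names are real MySQL 8.0 system variables, but the expected values and the minimum version are an example policy, not an official recommendation.

```python
# Example hardening baseline: system variable -> expected value (hypothetical policy).
BASELINE = {
    "require_secure_transport": "ON",  # reject unencrypted client connections
    "local_infile": "OFF",             # disable LOAD DATA LOCAL INFILE
    "default_password_lifetime": "90", # expire passwords after 90 days
}
MINIMUM_VERSION = (8, 0, 36)  # hypothetical: the oldest patch release you accept


def parse_version(version: str) -> tuple[int, ...]:
    # "8.0.36-log" -> (8, 0, 36); drop any distribution suffix after "-".
    core = version.split("-")[0]
    return tuple(int(part) for part in core.split("."))


def audit(variables: dict[str, str]) -> list[str]:
    """Return human-readable findings; an empty list means no deviations."""
    findings = []
    for name, expected in BASELINE.items():
        actual = variables.get(name)
        if actual is None or actual.upper() != expected:
            findings.append(f"{name} is {actual!r}, expected {expected!r}")
    version = variables.get("version", "0")
    if parse_version(version) < MINIMUM_VERSION:
        findings.append(f"server version {version} is older than "
                        + ".".join(map(str, MINIMUM_VERSION)))
    return findings
```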

Version Control & Deployment

Managing a MySQL database project effectively requires not only robust coding practices but also a streamlined approach to version control and deployment. These aspects are crucial for maintaining code integrity, facilitating collaboration, and ensuring a smooth transition from development to production environments. This section details how to leverage version control and implement efficient deployment strategies for your database projects.

Using Version Control Systems for Database Schema Changes

Version control systems, particularly Git, are indispensable for managing changes to your database schema. They allow you to track modifications, revert to previous states, and collaborate seamlessly with other developers. To effectively manage database schema changes using Git:

- Initialize a Git repository: Create a Git repository in your project’s root directory to track all changes, including SQL scripts for schema definition.

- Create a dedicated directory for schema scripts: Organize your SQL scripts (e.g., `create_tables.sql`, `alter_table_add_column.sql`) in a separate directory, such as `db/schema`. This keeps your schema definition files distinct from your application code.

- Commit changes frequently: Commit changes to your schema scripts after each logical modification. Use descriptive commit messages that clearly explain the purpose of each change (e.g., “Added index to users table for faster lookups”).

- Branching for feature development: Create branches for new features or major changes to your schema. This allows you to develop and test changes in isolation without affecting the main codebase.

- Merging and conflict resolution: When merging a branch, resolve any conflicts carefully: review both versions and choose the correct schema definition. Also watch for conflicts that Git cannot detect, such as two branches adding different migration scripts with the same version number.

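- Tagging releases: Tag the commits that correspond to releases so you can always identify, and rebuild, the exact schema that shipped with each version. Checking out an older tag does not by itself change a live database; rolling back production still requires a reverse migration or a restore from backup.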

- Using a database migration tool: Consider using database migration tools (e.g., Flyway, Liquibase) to automate schema changes and ensure consistency across environments. These tools integrate well with version control systems and manage the application of schema changes.
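To illustrate what migration tools such as Flyway automate, here is a toy runner that applies versioned scripts (named in Flyway's `V<version>__<description>.sql` style) in version order and records each one in a history table, so running it twice is harmless. It uses `sqlite3` as a stand-in database and assumes one SQL statement per script; the `schema_history` table and the function names are our own, not Flyway's.

```python
import re
import sqlite3

# Flyway-style file names: V<version>__<description>.sql
MIGRATION_NAME = re.compile(r"^V(\d+)__(\w+)\.sql$")


def pending_migrations(files: dict[str, str], applied: set[int]) -> list[tuple[int, str, str]]:
    """Return (version, name, sql) for unapplied migrations, oldest first."""
    pending = []
    for name, sql in files.items():
        match = MIGRATION_NAME.match(name)
        if not match:
            raise ValueError(f"unexpected migration file name: {name}")
        version = int(match.group(1))
        if version not in applied:
            pending.append((version, name, sql))
    return sorted(pending)


def migrate(conn: sqlite3.Connection, files: dict[str, str]) -> list[str]:
    """Apply pending migrations in order and record each in schema_history."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_history "
                 "(version INTEGER PRIMARY KEY, script TEXT NOT NULL)")
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_history")}
    done = []
    for version, name, sql in pending_migrations(files, applied):
        with conn:  # commits the history row; a failing statement raises and stops the run
            conn.execute(sql)
            conn.execute("INSERT INTO schema_history (version, script) VALUES (?, ?)",
                         (version, name))
        done.append(name)
    return done


scripts = {
    "V2__add_email_index.sql": "CREATE INDEX idx_users_email ON users (email)",
    "V1__create_users.sql": "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)",
}
conn = sqlite3.connect(":memory:")
print(migrate(conn, scripts))  # ['V1__create_users.sql', 'V2__add_email_index.sql']
print(migrate(conn, scripts))  # []
```

A real tool adds checksums, locking, and multi-statement parsing. Keep in mind that MySQL DDL statements commit implicitly, so a migration that fails partway through can leave its earlier statements applied; small, single-purpose migrations are easier to recover from.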

Deploying a MySQL Database Project to a Production Environment

Deploying a MySQL database to a production environment requires careful planning and execution to minimize downtime and data loss. The following are key steps:

- Environment setup: Ensure your production environment has the necessary hardware, operating system, and MySQL installation.

- Backup and recovery strategy: Implement a robust backup and recovery strategy to protect your data. This includes regular backups and a plan for restoring data in case of a failure. Common backup methods include logical backups using `mysqldump` and physical backups using tools like Percona XtraBackup.

- Schema deployment: Apply schema changes to the production database. This can be done manually or using a database migration tool.

- Data migration (if needed): If there are any data migrations (e.g., transforming data to fit new schema), execute them after the schema changes.

- Application configuration: Configure your application to connect to the production database. This includes updating connection strings, credentials, and any other environment-specific settings.

- Testing: Thoroughly test your application to ensure it works correctly with the production database.

- Monitoring and logging: Implement monitoring and logging to track database performance and identify potential issues.
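For the application configuration step, one common approach is to read connection settings from environment variables instead of hard-coding them, and to fail at startup if any are missing. The variable names below (`DB_HOST`, `DB_PASSWORD`, and so on) are hypothetical; 3306 is MySQL's default port.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class DbConfig:
    host: str
    port: int
    user: str
    password: str = field(repr=False)  # keep the secret out of logs and tracebacks
    database: str = ""
    ssl_ca: Optional[str] = None       # CA certificate path for TLS verification


def load_db_config(env: Mapping[str, str] = os.environ) -> DbConfig:
    """Read production connection settings from environment variables."""
    required = ["DB_HOST", "DB_USER", "DB_PASSWORD", "DB_NAME"]
    missing = [name for name in required if not env.get(name)]
    if missing:
        # Fail fast at startup instead of on the first query.
        raise RuntimeError("missing database settings: " + ", ".join(missing))
    return DbConfig(
        host=env["DB_HOST"],
        port=int(env.get("DB_PORT", "3306")),
        user=env["DB_USER"],
        password=env["DB_PASSWORD"],
        database=env["DB_NAME"],
        ssl_ca=env.get("DB_SSL_CA"),
    )
```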

Deployment Strategies for MySQL Databases

Different deployment strategies can be used to minimize downtime and risks during database deployments.

- Blue-Green Deployments: This strategy uses two identical environments: a “blue” environment (current production) and a “green” environment that receives the new version. After the green environment has been tested, traffic is switched from blue to green. Downtime is limited to the switch itself, and if the green environment fails, you can quickly switch back to blue. For databases, the green copy must be kept in sync with blue (typically through replication) until the cutover, and you need a plan for writes made on green if you roll back.

- Rolling Updates: This strategy updates a subset of database instances at a time (for example, one replica in a replicated cluster) while the remaining instances continue to serve traffic. Once the updated instances pass testing, the next subset is updated. Because old and new versions run side by side during the rollout, schema changes must be backward compatible. This approach suits highly available database setups.

- Canary Deployments: This strategy involves deploying a new version of the database to a small subset of users or a small portion of the database infrastructure. This allows you to test the new version in a production environment with minimal impact. If the canary deployment is successful, you can gradually roll out the new version to the entire infrastructure.
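Canary and blue-green deployments both come down to controlling which database a request reaches. The sketch below, with hypothetical connection strings, routes a stable percentage of users to the new (green) environment using a hash of the user ID: 0% keeps everyone on blue, a partial value is a canary, and 100% is the blue-green cutover. It only models traffic routing; keeping the two databases' data in sync, typically through replication, is a separate concern.

```python
import hashlib


class DatabaseRouter:
    """Route each user to the blue (current) or green (new) database."""

    def __init__(self, blue_dsn: str, green_dsn: str) -> None:
        self.targets = {"blue": blue_dsn, "green": green_dsn}
        self.canary_percent = 0  # start with all traffic on blue

    @staticmethod
    def bucket(user_id: str) -> int:
        # Stable bucket 0-99, so a user stays on the same side between requests.
        digest = hashlib.sha256(user_id.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % 100

    def target(self, user_id: str) -> str:
        side = "green" if self.bucket(user_id) < self.canary_percent else "blue"
        return self.targets[side]

    def set_canary(self, percent: int) -> None:
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self.canary_percent = percent

    def cut_over(self) -> None:
        self.set_canary(100)  # full blue-green switch

    def roll_back(self) -> None:
        self.set_canary(0)    # send everyone back to blue


router = DatabaseRouter("mysql://blue-db/shop", "mysql://green-db/shop")
router.set_canary(10)  # roughly 10% of users hit the new environment
```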

Automating Database Deployment

Automating database deployment is crucial for efficiency and repeatability. Scripting or using deployment tools can help streamline the process.

- Scripting: Use Bash or Python scripts, together with SQL scripts, to automate the deployment process. These scripts can handle tasks like:

  - Creating backups

  - Applying schema changes

  - Configuring the database

  - Running data migrations

- Deployment Tools: Several deployment tools are available, such as:

  - Ansible: A configuration management and deployment tool that can automate database deployment tasks.

  - Terraform: An infrastructure-as-code tool that can manage the provisioning and configuration of database infrastructure.

  - Database Migration Tools (Flyway, Liquibase): These tools automate schema changes and data migrations, which can be integrated into your deployment pipelines.
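A deployment script mostly needs to run its steps in a fixed order and stop at the first failure, so that, for example, schema changes never run if the backup failed. A minimal, hypothetical Python runner might look like this; in practice each action would call a real tool, for instance `subprocess.run([...], check=True)` for `mysqldump` or a migration tool's command-line interface. The step names and the simulated failure are illustrative only.

```python
from typing import Callable

Step = tuple[str, Callable[[], None]]


def run_deployment(steps: list[Step], log: Callable[[str], None] = print) -> bool:
    """Run deployment steps in order; stop at the first failure."""
    for name, action in steps:
        log(f"START {name}")
        try:
            action()
        except Exception as exc:  # any failing step aborts the deployment
            log(f"FAILED {name}: {exc}")
            return False
        log(f"DONE {name}")
    return True


completed = []


def step(name: str) -> Step:
    # Placeholder action that only records that the step ran.
    return (name, lambda: completed.append(name))


def failing() -> None:
    raise RuntimeError("data migration failed")  # simulated failure


ok = run_deployment([
    step("create backup"),
    step("apply schema changes"),
    ("run data migrations", failing),
    step("configure application"),
])
print(ok, completed)  # False ['create backup', 'apply schema changes']
```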

Flowchart of the Database Deployment Process