Embarking on the journey of “how to code AI language translation” opens a fascinating world where technology transcends linguistic barriers. This guide will delve into the core principles of AI-powered translation, exploring its evolution from basic systems to sophisticated neural networks. We’ll examine the critical components, data requirements, and the programming languages that fuel these innovative systems, paving the way for a deeper understanding of this transformative field.

From the initial concepts to real-world applications, we’ll unpack the intricacies of AI translation, including neural machine translation (NMT) models and the crucial role of data preparation. We’ll navigate the ethical considerations and challenges, as well as peek into the future of AI translation, exploring emerging trends and their potential impact on global communication.

Introduction to AI Language Translation

AI language translation is a transformative technology enabling the automated conversion of text or speech from one language (the source language) to another (the target language). This process leverages artificial intelligence, specifically machine learning algorithms, to understand the nuances of human language, including grammar, syntax, and semantics. The goal is to produce a translation that is accurate, fluent, and conveys the original meaning effectively.

Brief History of Language Translation

The evolution of language translation has been marked by significant advancements. Early translation efforts relied heavily on human translators, a process often time-consuming and costly. The advent of computers spurred the development of rule-based systems in the mid-20th century, which relied on predefined grammatical rules and dictionaries. However, these systems struggled with the complexities and ambiguities inherent in natural language. The late 20th and early 21st centuries saw the rise of statistical machine translation (SMT), which utilized large datasets of parallel texts (texts in both the source and target languages) to learn translation patterns.

SMT improved accuracy compared to rule-based systems but still faced challenges in handling complex sentence structures and idiomatic expressions. The introduction of neural machine translation (NMT) in the 2010s marked a paradigm shift. NMT systems, based on artificial neural networks, demonstrated a significant leap in translation quality, producing more fluent and accurate translations than previous methods. The evolution continues, with ongoing research focused on improving accuracy, handling low-resource languages, and addressing ethical considerations.

Significance of AI Language Translation in a Globalized World

AI language translation plays a vital role in facilitating communication and collaboration in today’s interconnected world. Its impact is felt across numerous sectors, influencing how individuals, businesses, and cultures interact.

- Communication: AI translation tools break down language barriers, enabling people from different linguistic backgrounds to communicate more easily. This is crucial for international travel, online interactions, and accessing information in multiple languages. For example, real-time translation apps on smartphones allow travelers to converse with locals in their native languages, enhancing travel experiences and fostering cross-cultural understanding.

- Business: In the business world, AI translation facilitates global commerce, marketing, and customer service. Companies can translate websites, product descriptions, and marketing materials to reach international audiences, expanding their market reach and boosting sales. Consider the example of a multinational corporation that uses AI translation to localize its website and customer support resources into dozens of languages, thereby catering to a diverse global customer base.

- Cultural Exchange: AI translation promotes the dissemination of cultural content across linguistic boundaries. Books, movies, and other forms of media can be translated, making them accessible to a wider audience. This helps to preserve and share cultural heritage, fostering greater understanding and appreciation of different cultures. For instance, the availability of translated literature allows readers worldwide to engage with diverse literary traditions, promoting cultural exchange and enriching global perspectives.

Fundamental Components of AI Language Translation Systems

AI language translation systems are complex architectures built upon several key components. These components work in concert to analyze source language text, understand its meaning, and generate accurate translations in the target language. The effectiveness of these systems relies on the interplay of these elements, leveraging advancements in natural language processing, machine learning, and neural networks.

Natural Language Processing (NLP)

NLP forms the foundation of AI language translation. It allows computers to understand, interpret, and generate human language. NLP encompasses a range of techniques and processes crucial for enabling machines to handle text effectively.

- Tokenization: This involves breaking down the input text into individual units, such as words or sub-words (e.g., morphemes). For example, the sentence “The quick brown fox” would be tokenized into [“The”, “quick”, “brown”, “fox”]. This is a foundational step, as it allows the system to work with discrete elements.

- Part-of-Speech (POS) Tagging: POS tagging assigns grammatical tags (e.g., noun, verb, adjective) to each word in a sentence. This helps the system understand the syntactic structure of the sentence. For example, in the sentence “They can fish,” “can” may be a modal verb (“they are able to fish”) or a main verb meaning “to put into cans,” in which case “fish” is a noun rather than a verb. Choosing the right tags resolves this ambiguity, and with it the correct translation.

- Parsing: Parsing analyzes the grammatical structure of a sentence, creating a parse tree that represents the relationships between words. This helps the system understand the meaning of the sentence.

- Named Entity Recognition (NER): NER identifies and classifies named entities in the text, such as people, organizations, locations, and dates. This information is crucial for accurate translation, especially for proper nouns. For example, the system needs to correctly identify “Paris” as a location and translate it accordingly.

- Sentiment Analysis: Sentiment analysis determines the emotional tone of the text. This helps the system maintain the original sentiment during translation. For example, a positive statement should translate to a positive statement in the target language.
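The tokenization step above mentions sub-word units. One widely used way to learn them is Byte Pair Encoding (BPE), which starts from single characters and repeatedly merges the most frequent pair of adjacent symbols. The sketch below learns merge rules from a made-up word-frequency table; the helper names (`learn_bpe`, `merge_pair`, `get_pair_counts`) are illustrative rather than from any library, and production tokenizers such as SentencePiece or Hugging Face Tokenizers add many refinements.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    return pairs


def merge_pair(pair, vocab):
    """Rewrite every word so that each occurrence of `pair` becomes one symbol."""
    merged_vocab = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged_vocab[key] = merged_vocab.get(key, 0) + freq
    return merged_vocab


def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` BPE merge rules from a {word: frequency} table."""
    # Start from single characters plus an end-of-word marker, so merges never cross words.
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair (first one seen wins ties)
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


# Toy word counts, made up for illustration.
word_freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, vocab = learn_bpe(word_freqs, num_merges=3)
print(merges)  # [('e', 's'), ('es', 't'), ('est', '</w>')]
```

Applying the learned merges in the same order segments new words: an unseen word such as “lowest” would come out as `l o w est</w>`, reusing the `est</w>` unit learned from “newest” and “widest”.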

Machine Learning (ML)

Machine learning provides the algorithms and models that enable AI translation systems to learn from data and improve their performance. Three broad approaches have been used:

- Statistical Machine Translation (SMT): SMT models rely on statistical analysis of large parallel corpora (texts in both source and target languages) to learn translation probabilities. SMT systems typically use techniques like n-gram modeling and phrase-based translation.

- Example-Based Machine Translation (EBMT): EBMT systems store a database of previously translated sentences, find the stored examples most similar to the input sentence, and adapt their translations. This approach works best in domains with many similar, repetitive sentences.

- Neural Machine Translation (NMT): NMT utilizes neural networks, particularly deep learning architectures, to learn complex relationships between source and target languages. NMT models are known for their ability to generate more fluent and accurate translations compared to SMT.
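The SMT bullet says translation probabilities are learned from parallel corpora. A classic way to do this is IBM Model 1, which uses expectation-maximization (EM) to estimate word-translation probabilities even though no word alignments are given in advance. The sketch below runs it on a three-sentence toy German-English corpus (made up for illustration) and omits the NULL source word that the full model includes; the function names are illustrative.

```python
from collections import defaultdict


def train_ibm_model1(pairs, iterations=10):
    """Estimate t[(tgt_word, src_word)] = P(tgt_word | src_word) with EM (IBM Model 1, no NULL word)."""
    src_vocab = {w for src, _ in pairs for w in src}
    tgt_vocab = {w for _, tgt in pairs for w in tgt}
    # Uniform start: every target word is equally likely for every source word.
    t = {(f, e): 1.0 / len(tgt_vocab) for e in src_vocab for f in tgt_vocab}

    for _ in range(iterations):
        counts = defaultdict(float)  # expected number of times e is aligned to f
        totals = defaultdict(float)  # expected number of alignments involving e
        # E-step: split each target word's alignment over the source words in its sentence.
        for src, tgt in pairs:
            for f in tgt:
                norm = sum(t[(f, e)] for e in src)
                for e in src:
                    share = t[(f, e)] / norm
                    counts[(f, e)] += share
                    totals[e] += share
        # M-step: turn expected counts back into probabilities.
        t = {(f, e): counts[(f, e)] / totals[e] for (f, e) in t}
    return t


def best_translation(t, src_word):
    """Most probable target word for `src_word` under the learned table."""
    candidates = {f: p for (f, e), p in t.items() if e == src_word}
    return max(candidates, key=candidates.get)


# Toy German-English parallel corpus (tokenized), made up for illustration.
pairs = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]
t = train_ibm_model1(pairs, iterations=10)
for word in ["das", "haus", "buch", "ein"]:
    print(word, "->", best_translation(t, word))
```

No sentence pair says which word translates which, yet EM resolves the ambiguity: “das” co-occurs with “the” twice, which pins down “the”, and that in turn leaves “house” to align with “haus”. Phrase-based systems such as Moses build their phrase tables on top of word alignments of this kind.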

Neural Networks

Neural networks, particularly deep learning models, are the driving force behind modern AI language translation. These networks are designed to learn complex patterns and relationships within data.

- Recurrent Neural Networks (RNNs): RNNs, especially LSTMs (Long Short-Term Memory) and GRUs (Gated Recurrent Units), are well-suited for processing sequential data like text. They can capture dependencies between words in a sentence.

- Encoder-Decoder Architectures: A common architecture for NMT involves an encoder that processes the source sentence and creates a contextual representation, and a decoder that generates the translated sentence based on this representation.

- Attention Mechanisms: Attention mechanisms allow the decoder to focus on relevant parts of the source sentence when generating each word of the target sentence. This improves translation accuracy, especially for longer sentences.

- Transformers: Transformers, a type of neural network architecture, have become dominant in NMT. They utilize self-attention mechanisms, allowing the model to weigh the importance of different words in the input sequence. This architecture has proven highly effective and efficient.
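The self-attention idea in the Transformers bullet can be made concrete in a few lines of NumPy. This is a minimal single-head sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, with random matrices standing in for learned token embeddings and projection weights; real Transformers add multiple heads, positional information, and masking.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (seq_len, d_model) token representations.
    Returns the attended outputs (seq_len, d_k) and the attention weights (seq_len, seq_len).
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # how strongly each token attends to every other token
    weights = softmax(scores, axis=-1)  # each row is a probability distribution
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8  # e.g. the 4 tokens of "The quick brown fox"
X = rng.normal(size=(seq_len, d_model))  # stand-in for token embeddings (random, not learned)
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.shape)  # (4, 8) (4, 4)
```

Row i of `weights` shows how much token i draws on every token in the sentence, including itself; because all rows are computed with matrix products rather than a loop over positions, the whole sentence is processed in parallel.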

Examples of AI Models and Architectures

Several AI models and architectures have been developed for language translation, each with its strengths and weaknesses.

- Google Translate: Google Translate uses NMT models that incorporate the Transformer architecture. It supports well over 100 languages, and its strengths are broad language coverage and good overall accuracy.

- Microsoft Translator: Microsoft Translator also employs NMT models. It integrates with various Microsoft products and services. It is known for its integration capabilities and ability to handle real-time translation.

- DeepL Translator: DeepL Translator uses its own NMT architecture and focuses on translation quality and nuance. It is frequently cited for producing natural-sounding, fluent translations.

- Limitations of AI Translation: Despite significant advancements, AI translation systems still have limitations. They can struggle with idiomatic expressions, cultural nuances, and highly technical or specialized language. They may also generate incorrect translations when faced with ambiguous or context-dependent sentences. Over-reliance on AI translation can lead to misunderstandings if the output is not reviewed by a human.

Data and Training for AI Language Translation

The performance of any AI language translation system hinges significantly on the data used for training. The quality, quantity, and diversity of this data directly impact the accuracy, fluency, and overall effectiveness of the translation models. This section explores the crucial role of data in training, the techniques used for data preparation, and methods for evaluating the performance of these models.

Types of Data Used in Training

AI language translation models rely on several types of data to learn the nuances of language and perform accurate translations.

- Parallel Corpora: These are collections of text in two or more languages, where each sentence or segment has its corresponding translation in the other languages. They are the cornerstone of training for most translation models. The larger and more diverse the parallel corpus, the better the model’s ability to learn complex linguistic patterns and translate accurately. Examples include:

- The Europarl corpus, comprising parliamentary proceedings from the European Union.

- The United Nations corpus, containing official documents from the United Nations.

- OpenSubtitles, which contains movie and TV show subtitles in numerous languages.

- Monolingual Text: This type of data includes text in a single language. It’s used to enhance the model’s understanding of the language’s grammar, vocabulary, and style. Monolingual data is especially useful for improving fluency and naturalness in the translated output. Examples include:

- Large collections of news articles.

- Books and literary works.

- Web pages.

- Back-translation Data: A model trained in the reverse direction translates monolingual target-language text back into the source language. Each machine-translated sentence is paired with its original, creating additional synthetic parallel data that expands the training set and improves the model’s ability to handle diverse sentence structures and vocabulary.

- Multilingual Data: In cases where a model needs to translate between multiple languages, multilingual datasets are used. These datasets contain text in several languages, allowing the model to learn relationships between many language pairs simultaneously.
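The back-translation bullet describes a data-generation loop that is easy to sketch. Below, English is the source language and French the target; `toy_reverse_translate` is a hypothetical placeholder for a real French-to-English model (a word lookup here, so the example runs), and all sentences are made up.

```python
def toy_reverse_translate(sentence):
    """Hypothetical stand-in for a trained French-to-English model (word-by-word lookup)."""
    lexicon = {"le": "the", "chat": "cat", "chien": "dog", "dort": "sleeps", "mange": "eats"}
    return " ".join(lexicon.get(word, word) for word in sentence.split())


def back_translate(monolingual_target, reverse_translate):
    """Turn target-language-only sentences into synthetic (source, target) training pairs.

    The source side is machine-generated; the target side is genuine text, so the
    forward (English-to-French) model still learns from natural French output.
    """
    return [(reverse_translate(tgt), tgt) for tgt in monolingual_target]


real_pairs = [("the cat eats", "le chat mange")]    # human-translated parallel data
monolingual_fr = ["le chien dort", "le chat dort"]  # French text with no English side
synthetic_pairs = back_translate(monolingual_fr, toy_reverse_translate)
training_pairs = real_pairs + synthetic_pairs

for src, tgt in training_pairs:
    print(f"{src} -> {tgt}")
```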

Data Preparation, Cleaning, and Pre-processing Techniques

Data preparation is a critical step in training AI translation models. The quality of the training data directly influences the model’s performance. This process involves several stages to ensure the data is clean, consistent, and suitable for training.

- Data Collection: The first step involves gathering data from various sources, such as publicly available datasets, web scraping, or APIs. It’s important to consider the size, domain, and language coverage of the data.

- Data Cleaning: This involves removing irrelevant or noisy data that can negatively impact the model’s performance. Cleaning includes:

- Removing or correcting typographical errors and inconsistencies.

- Eliminating duplicate entries.

- Handling special characters and encoding issues.

- Filtering out sentences that are too short or too long, as they might not be representative of typical language usage.

- Data Pre-processing: This step transforms the cleaned data into a format suitable for training. Pre-processing techniques include:

- Tokenization: Breaking down text into individual words or sub-word units (tokens). This can be done using techniques such as word-based tokenization or sub-word tokenization (e.g., Byte Pair Encoding, BPE).

- Normalization: Converting text to a consistent format. This may involve:

- Lowercasing all text.

- Removing punctuation.

- Standardizing numbers and dates.

- Sentence Segmentation: Dividing the text into individual sentences, which is important for training sequence-to-sequence models.

- Vocabulary Creation: Building a vocabulary of unique tokens from the training data.

- Data Augmentation: This involves creating new training data from existing data to increase the size and diversity of the dataset. Techniques include:

- Back-translation.

- Adding noise to the input text.

- Replacing words with synonyms.
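The cleaning, normalization, and augmentation steps above can be combined into a small pipeline over (source, target) pairs. This is a sketch under simple assumptions: whitespace tokenization, length limits counted in words (the bounds 1 and 50 are arbitrary), exact-match deduplication after normalization, and random word dropout as the noise-injection method. Tokenization into sub-words is left out, and all function names are hypothetical.

```python
import random
import re
import string
import unicodedata


def normalize(text, lowercase=True, strip_punct=False):
    """Unicode-normalize, optionally lowercase and strip ASCII punctuation, collapse whitespace."""
    text = unicodedata.normalize("NFC", text)  # one canonical encoding for accented characters
    if lowercase:
        text = text.lower()
    if strip_punct:
        text = text.translate(str.maketrans("", "", string.punctuation))
    return re.sub(r"\s+", " ", text).strip()


def clean_parallel(pairs, min_len=1, max_len=50):
    """Normalize both sides, drop pairs outside the length bounds, and remove duplicates."""
    seen = set()
    cleaned = []
    for src, tgt in pairs:
        src, tgt = normalize(src), normalize(tgt)
        n_src, n_tgt = len(src.split()), len(tgt.split())
        if not (min_len <= n_src <= max_len and min_len <= n_tgt <= max_len):
            continue  # empty or overly long segment
        if (src, tgt) in seen:
            continue  # exact duplicate after normalization
        seen.add((src, tgt))
        cleaned.append((src, tgt))
    return cleaned


def add_word_dropout_noise(sentence, drop_prob=0.1, rng=random):
    """Augmentation: randomly drop words from a sentence, keeping at least one."""
    words = sentence.split()
    kept = [w for w in words if rng.random() >= drop_prob]
    return " ".join(kept or words[:1])


raw = [
    ("Hello,   World!", "Bonjour,   le monde !"),
    ("hello, world!", "bonjour, le monde !"),  # duplicate once normalized
    ("", "vide"),                               # empty source side
    ("Good night", "Bonne nuit"),
]
cleaned_pairs = clean_parallel(raw)
print(cleaned_pairs)  # [('hello, world!', 'bonjour, le monde !'), ('good night', 'bonne nuit')]
print(add_word_dropout_noise("the quick brown fox jumps", drop_prob=0.3, rng=random.Random(0)))
```

Punctuation removal is off by default here, and lowercasing is a switch, because both discard information a translation model may need to reproduce, such as question marks or capitalized German nouns.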

Methods for Evaluating the Performance of AI Translation Models

Evaluating the performance of AI translation models is essential to assess their accuracy, fluency, and overall quality. Several metrics and evaluation methods are used to gauge the effectiveness of these models.

- BLEU (Bilingual Evaluation Understudy): This is one of the most widely used metrics for evaluating machine translation. It measures the similarity between the machine-translated output and a set of reference translations. BLEU considers n-gram precision and brevity penalty.

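$$\text{BLEU} = BP \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right)$$

where:

- $BP$ = Brevity Penalty, which penalizes translations shorter than the references: $BP = 1$ if $c > r$, otherwise $BP = e^{1 - r/c}$, where $c$ is the length of the machine translation and $r$ is the reference length.

- $w_n$ = Weight for n-gram precision; standard BLEU uses $N = 4$ with uniform weights $w_n = 1/4$.

- $p_n$ = Modified precision of n-grams: the fraction of n-grams in the machine translation that also appear in a reference translation, with each n-gram's count clipped to the maximum number of times it occurs in any single reference.

BLEU scores range from 0 to 1 (often reported on a 0 to 100 scale), with higher scores indicating better translation quality.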

- METEOR (Metric for Evaluation of Translation with Explicit Ordering): This metric addresses some of the limitations of BLEU by incorporating word stemming, synonyms, and word order. It calculates a weighted harmonic mean of unigram precision and recall (weighted toward recall), with a penalty when the matched words appear in a different order from the reference.

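$$\text{METEOR} = (1 - \text{Penalty}) \cdot F_{mean}, \qquad F_{mean} = \frac{P \cdot R}{\alpha P + (1 - \alpha) R}$$

$$\text{Penalty} = \gamma \left(\frac{\text{chunks}}{\text{matches}}\right)^{\beta}$$

where:

- $P$ and $R$ are unigram precision and recall against the reference.

- Penalty grows as the matched words split into more separate chunks, i.e. as their order in the machine translation diverges from the reference.

- $\alpha$, $\beta$, and $\gamma$ are parameters; the original METEOR used $\alpha = 0.9$, $\beta = 3$, and $\gamma = 0.5$, which gives $F_{mean} = 10PR/(R + 9P)$.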

METEOR scores also range from 0 to 1, with higher scores indicating better translation quality.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): This metric is commonly used in text summarization, but can also be applied to machine translation. ROUGE measures the overlap of n-grams, word sequences, and word pairs between the machine-translated output and the reference translations. Different variants of ROUGE exist, such as ROUGE-N, ROUGE-L, and ROUGE-SU.

- Human Evaluation: This involves human annotators assessing the quality of the translations based on fluency, adequacy, and overall meaning preservation. Human evaluation is often considered the gold standard, as it provides a more nuanced understanding of the translation quality. It can be done using:

- Direct Assessment: Humans rate the quality of the translation on a scale.

- Ranking: Humans rank different translations of the same sentence based on quality.

- Error Analysis: Humans identify and categorize errors in the translations (e.g., grammatical errors, word choice errors, meaning errors).

- Other Metrics: Additional metrics and methods are used depending on the specific requirements and characteristics of the translation task:

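- TER (Translation Edit Rate): Measures the minimum number of edits (insertions, deletions, substitutions, and shifts of word sequences) needed to transform the machine-translated output into a reference translation, divided by the reference length. Lower is better.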

- CDER (Cover Disjoint Error Rate): A word-level, edit-distance-based metric that allows whole blocks of words to be moved, so output that is correct but reordered is penalized less.

- Task-Specific Evaluation: If the translation is used for a specific task (e.g., question answering), the evaluation focuses on the task’s performance using the translated text.
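The BLEU formula given for the first metric in this list translates almost line for line into code. The sketch below is an unsmoothed, sentence-level version with uniform weights and N = 4, working on pre-tokenized word lists; `simple_sentence_bleu` is a hypothetical helper name. Published scores are normally computed at corpus level with a standard tool such as sacreBLEU, which also standardizes tokenization, so numbers from this sketch are for intuition only.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_sentence_bleu(candidate, references, max_n=4):
    """Unsmoothed BLEU for one candidate against one or more references (token lists)."""
    precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        # Clip each n-gram count by its maximum count in any single reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
        total = max(sum(cand_counts.values()), 1)
        precisions.append(clipped / total)  # modified precision p_n

    if min(precisions) == 0:
        return 0.0  # log(0) is undefined; real toolkits apply smoothing instead
    # Brevity penalty, using the reference length closest to the candidate length.
    c = len(candidate)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    weights = [1 / max_n] * max_n
    return bp * math.exp(sum(w * math.log(p) for w, p in zip(weights, precisions)))


reference = "the quick brown fox jumps over the lazy dog".split()
candidate = "the quick brown fox jumped over the lazy dog".split()
print(round(simple_sentence_bleu(candidate, [reference]), 3))  # 0.597
print(round(simple_sentence_bleu("the quick brown fox".split(), [reference]), 3))  # 0.287
```

Changing “jumps” to “jumped” breaks one unigram but also every bigram, trigram, and 4-gram containing it, which is why one wrong word lowers the score to about 0.6. The second call has perfect n-gram precision, yet the brevity penalty $e^{1 - 9/4}$ cuts its score to about 0.29.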

Neural Machine Translation (NMT) Explained

Neural Machine Translation (NMT) has revolutionized the field of language translation, offering significant improvements over traditional statistical methods. NMT models leverage artificial neural networks to translate text from one language to another. This section delves into the architecture, components, and advancements within NMT, providing a comprehensive understanding of its inner workings and performance.

Architecture of NMT Models

NMT models typically employ an encoder-decoder architecture. This structure is designed to encode the input sequence (source language) into a dense vector representation and then decode this representation to generate the output sequence (target language). This process is fundamental to how NMT systems function.

- Encoder: The encoder reads the input sequence (in RNN-based models, one word at a time) and creates a contextualized representation of the entire input sentence. This representation, often called a “context vector” or “thought vector,” captures the meaning and relationships between words. This vector is then passed to the decoder.

- Decoder: The decoder takes the context vector from the encoder and generates the output sequence, word by word, in the target language. The decoder’s task is to “unravel” the encoded information and translate it into the desired language.

- Recurrent Neural Networks (RNNs): Initially, RNNs, particularly LSTMs (Long Short-Term Memory) and GRUs (Gated Recurrent Units), were the dominant choice for both the encoder and decoder. These networks are well-suited for processing sequential data like text because they maintain an internal state that allows them to remember information from previous time steps. They process the input sequentially, updating their internal state at each step. However, RNNs can struggle with long-range dependencies: information about early words must survive many sequential state updates, and squeezing a whole sentence into one fixed-size context vector loses detail in long inputs.

- Attention Mechanisms: To address the limitations of RNNs, attention mechanisms were introduced. Attention allows the decoder to focus on different parts of the input sequence when generating each word of the output sequence. This is achieved by assigning different weights to the encoder’s hidden states. Higher weights indicate that a particular part of the input sequence is more relevant to the current output word.

- Transformers: Transformers have become the state-of-the-art architecture for NMT. Transformers are based entirely on attention mechanisms, eliminating the need for recurrence. They use “self-attention” to relate different words in the input sequence to each other and “encoder-decoder attention” to connect the encoder and decoder. This architecture allows for parallel processing of the input sequence, leading to faster training and better performance.
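The decoder bullet describes autoregressive generation: each new word is predicted from the encoder's representation plus the words produced so far, and generation stops at an end-of-sentence token. The loop below sketches greedy decoding. `toy_decoder_step` is a hypothetical placeholder that returns scripted probabilities instead of running a neural network, and the `<bos>`/`<eos>` marker names are assumptions.

```python
TGT_VOCAB = ["<bos>", "<eos>", "le", "chat", "dort"]


def toy_decoder_step(context_vector, prev_tokens):
    """Hypothetical stand-in for one step of a trained decoder.

    A real decoder would combine the context vector with the previously generated
    tokens; this one follows a fixed script so that the loop below can run.
    """
    script = ["le", "chat", "dort", "<eos>"]
    next_word = script[min(len(prev_tokens) - 1, len(script) - 1)]
    probs = [0.01] * len(TGT_VOCAB)
    probs[TGT_VOCAB.index(next_word)] = 0.96
    return probs  # one probability per word in TGT_VOCAB


def greedy_decode(context_vector, step_fn, max_len=10):
    """Generate target tokens one at a time, always keeping the most probable next token."""
    tokens = ["<bos>"]
    for _ in range(max_len):
        probs = step_fn(context_vector, tokens)
        best = max(range(len(probs)), key=probs.__getitem__)
        if TGT_VOCAB[best] == "<eos>":
            break  # the decoder signals the end of the sentence
        tokens.append(TGT_VOCAB[best])
    return tokens[1:]  # drop the <bos> start marker


context_vector = [0.0] * 8  # placeholder for the encoder's output
print(greedy_decode(context_vector, toy_decoder_step))  # ['le', 'chat', 'dort']
```

Production systems usually replace the greedy choice with beam search, which keeps several partial translations alive at each step and returns the best-scoring complete one.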

Comparative Analysis of NMT Architectures

Different NMT architectures exhibit varying strengths and weaknesses. A comparative analysis highlights the trade-offs involved in choosing a particular architecture.

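| Architecture | Advantages | Disadvantages |
|---|---|---|
| RNNs (LSTMs/GRUs) | Natural fit for sequential data; an internal state carries information from earlier words | Tokens are processed one at a time, which limits parallel training; struggle with long-range dependencies |
| CNNs | All positions in a sentence can be processed in parallel | Each layer sees only a local window, so many stacked layers are needed to relate distant words |
| Transformers | Self-attention relates any two words directly; parallel processing gives faster training and state-of-the-art quality | Self-attention cost grows quadratically with sentence length; typically need large datasets and substantial compute |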

Attention Mechanisms and Translation Quality

Attention mechanisms significantly enhance translation quality by enabling the model to focus on relevant parts of the input sequence. The mechanism computes a weighted sum of the encoder hidden states, where the weights are determined by the alignment between the decoder’s current state and each encoder hidden state.

- How Attention Works: For each word the decoder generates, the attention mechanism calculates an attention score for each word in the input sequence. This score represents how relevant each input word is to the current output word. These scores are then normalized using a softmax function to create a probability distribution. The encoder hidden states are then weighted by these probabilities and summed to produce a context vector.

- Impact on Translation: Attention allows the model to address the following issues.

- Handling Long Sentences: Attention helps the model to translate long sentences accurately by allowing it to focus on the most important parts of the input.

- Word Alignment: Attention implicitly learns word alignments between the source and target languages. This means that the model learns which words in the source language correspond to which words in the target language.

- Improved Accuracy: Attention-based models often produce more accurate and fluent translations compared to models without attention.

- Example: Consider the sentence “The cat sat on the mat.” When translating “mat,” the attention mechanism would likely assign high weights to the hidden states corresponding to “mat,” “on,” and “the” in the source sentence. This allows the decoder to accurately translate “mat” based on its relationship with the surrounding words.
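The steps in “How Attention Works” fit in a few lines of NumPy. This sketch uses plain dot-product scoring between the decoder state and each encoder state (the Luong-style “dot” score; Bahdanau's original attention scores with a small feed-forward network instead). The encoder and decoder states are hand-made vectors chosen to mirror the “mat” example, not outputs of a trained model.

```python
import numpy as np


def attention_step(decoder_state, encoder_states):
    """Dot-product attention for one decoder time step.

    decoder_state: (d,) current decoder hidden state.
    encoder_states: (T, d) one hidden state per source token.
    Returns (weights over source tokens, context vector).
    """
    scores = encoder_states @ decoder_state          # (T,) alignment scores
    scores = scores - scores.max()                   # numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()  # softmax: a probability distribution
    context = weights @ encoder_states               # (d,) weighted sum of encoder states
    return weights, context


source = ["The", "cat", "sat", "on", "the", "mat"]
# Hand-made encoder states: one-hot vectors, so each source token is easy to tell apart.
encoder_states = np.eye(len(source))
# Hand-made decoder state that "looks for" mat, and to a lesser degree on/the.
decoder_state = 3 * encoder_states[5] + encoder_states[3] + encoder_states[4]

weights, context = attention_step(decoder_state, encoder_states)
for token, w in zip(source, weights):
    print(f"{token:>4}: {w:.3f}")
```

With these states, “mat” receives about 70% of the attention weight, “on” and the second “the” about 10% each, and the remaining words about 3.5% each; the context vector handed to the decoder is dominated by the “mat” encoder state.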

Programming Languages and Libraries for AI Translation

Developing AI language translation systems requires a robust toolkit of programming languages and specialized libraries. The choice of these tools significantly impacts the development process, from data preprocessing and model building to training and deployment. This section explores the key programming languages and libraries that empower developers in the field of AI translation.

Popular Programming Languages for AI Translation

Python is the dominant language in AI language translation, owing to its versatility, extensive libraries, and large community support. Its readability and ease of use make it ideal for rapid prototyping and complex model development. Other languages are also employed, often for specific tasks or in conjunction with Python:

- Python: The primary language, favored for its simplicity, rich ecosystem of libraries (like TensorFlow and PyTorch), and ease of use. It is utilized for nearly every aspect of AI translation, including data manipulation, model building, training, and evaluation. Its dynamic typing and interpreted nature facilitate rapid development and experimentation.

- C++: Used for performance-critical components, especially in production environments. Libraries like TensorFlow and PyTorch offer C++ APIs, allowing developers to optimize model inference and reduce latency. C++’s speed and efficiency make it suitable for deploying trained models in resource-constrained environments.

- Java: Employed in enterprise-level translation systems and for integration with existing Java-based infrastructure. Java’s platform independence and strong ecosystem for software development make it a viable option for large-scale translation projects.

- JavaScript: Useful for building web-based translation interfaces and incorporating translation capabilities into web applications. Libraries like TensorFlow.js enable running trained models directly in the browser, so text can be translated on the user’s device without a round trip to a server.

Essential Libraries and Frameworks for AI Translation

Several libraries and frameworks are essential for building AI language translation systems. These tools provide pre-built functionalities for various tasks, such as data preprocessing, model building, and training. Choosing the right libraries is crucial for efficiency and scalability. Here’s a list of essential libraries and frameworks:

- TensorFlow: An open-source machine learning framework developed by Google. TensorFlow is widely used for building and training complex neural networks, including those for translation. It provides a flexible architecture for defining and executing computational graphs, allowing developers to experiment with different model architectures and training strategies.

- PyTorch: Another popular open-source machine learning framework, originally developed by Facebook’s (now Meta’s) AI Research lab. PyTorch offers a more Pythonic and intuitive interface, making it easier to prototype and debug models. It’s particularly well-suited for research and development, with its dynamic computational graphs providing flexibility in model design.

- Transformers (Hugging Face): A library providing pre-trained models and tools for natural language processing tasks, including translation. It simplifies the use of state-of-the-art transformer-based models, from general-purpose ones like BERT and GPT to encoder-decoder models suited to translation such as T5, mBART, and the MarianMT family. Using pre-trained models can significantly reduce training time and improve performance.

- NLTK (Natural Language Toolkit): A comprehensive library for natural language processing tasks, including tokenization, stemming, and part-of-speech tagging. NLTK is useful for preprocessing text data and preparing it for use in translation models.

- spaCy: An advanced NLP library designed for production use. It provides fast and accurate tokenization, part-of-speech tagging, named entity recognition, and dependency parsing. spaCy is useful for building robust and efficient text processing pipelines.

- Moses: A statistical machine translation system that is still relevant for some legacy systems or for comparison. It provides tools for data preparation, model training, and decoding. While neural machine translation has become dominant, Moses remains a useful tool for understanding and comparing different approaches to translation.

Code Examples for Building a Simple AI Translation Model

This section provides code examples illustrating how to use TensorFlow and PyTorch to build and train a simple AI translation model. These examples provide a starting point for understanding the core concepts and implementing translation models.

TensorFlow Example:

This example demonstrates a basic sequence-to-sequence model using TensorFlow. It uses a three-sentence toy dataset and focuses on illustrating the fundamental components, so its predictions should not be expected to be reliable.

```python
import numpy as np
import tensorflow as tf

# 1. Data Preparation (Simplified)
# Assume we have pairs of English and French sentences
english_sentences = ["hello", "world", "goodbye"]
french_sentences = ["bonjour", "monde", "au revoir"]

MAX_LENGTH = 5  # every sentence is padded or truncated to this many tokens


def build_vocab(sentences):
    """Map each word to an integer index; 0 is reserved for padding, 1 for unknown words."""
    words = sorted({word for s in sentences for word in s.split()})
    vocab = {"<pad>": 0, "<unk>": 1}
    for word in words:
        vocab[word] = len(vocab)
    return vocab


en_vocab = build_vocab(english_sentences)
fr_vocab = build_vocab(french_sentences)


# Convert sentences to numerical representations
def sentence_to_indices(sentence, vocab, max_length=MAX_LENGTH):
    indices = [vocab.get(word, vocab["<unk>"]) for word in sentence.split()]
    indices = indices[:max_length]  # Truncate
    indices += [vocab["<pad>"]] * (max_length - len(indices))  # Pad
    return indices


en_indices = np.array([sentence_to_indices(s, en_vocab) for s in english_sentences], dtype=np.int32)
fr_indices = np.array([sentence_to_indices(s, fr_vocab) for s in french_sentences], dtype=np.int32)

# 2. Model Definition (Simplified Sequence-to-Sequence)
# The encoder LSTM compresses the source into one vector; RepeatVector feeds that
# vector to the decoder LSTM at every output position.
embedding_dim = 4
units = 8  # Number of units in the LSTM layers

model = tf.keras.Sequential([
    tf.keras.Input(shape=(MAX_LENGTH,), dtype="int32"),
    tf.keras.layers.Embedding(len(en_vocab), embedding_dim),
    tf.keras.layers.LSTM(units),
    tf.keras.layers.RepeatVector(MAX_LENGTH),
    tf.keras.layers.LSTM(units, return_sequences=True),
    tf.keras.layers.Dense(len(fr_vocab), activation="softmax"),
])

# 3. Model Compilation
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

# 4. Model Training
# sparse_categorical_crossentropy accepts integer targets of shape (batch, MAX_LENGTH).
# Many epochs because the toy dataset has only three examples.
model.fit(en_indices, fr_indices, epochs=300, verbose=0)


# 5. Prediction (Inference)
def predict_translation(sentence, en_vocab, fr_vocab, model):
    input_data = np.array([sentence_to_indices(sentence, en_vocab)], dtype=np.int32)
    predictions = model.predict(input_data, verbose=0)  # (1, MAX_LENGTH, len(fr_vocab))
    predicted_indices = np.argmax(predictions, axis=-1)[0]
    reverse_fr_vocab = {index: word for word, index in fr_vocab.items()}
    words = [reverse_fr_vocab[int(i)] for i in predicted_indices]
    return " ".join(w for w in words if w != "<pad>")  # drop padding tokens


print(predict_translation("hello", en_vocab, fr_vocab, model))
```

PyTorch Example:

This example demonstrates a similar model using PyTorch. It illustrates the key steps involved in building and training a translation model with PyTorch’s functionalities.

```python
import torch
import torch.nn as nn
import torch.optim as optim

# 1. Data Preparation (Simplified) - same as the TensorFlow example
english_sentences = ["hello", "world", "goodbye"]
french_sentences = ["bonjour", "monde", "au revoir"]

MAX_LENGTH = 5


def build_vocab(sentences):
    """Map each word to an integer index; 0 is reserved for padding, 1 for unknown words."""
    words = sorted({word for s in sentences for word in s.split()})
    vocab = {"<pad>": 0, "<unk>": 1}
    for word in words:
        vocab[word] = len(vocab)
    return vocab


en_vocab = build_vocab(english_sentences)
fr_vocab = build_vocab(french_sentences)


def sentence_to_indices(sentence, vocab, max_length=MAX_LENGTH):
    indices = [vocab.get(word, vocab["<unk>"]) for word in sentence.split()]
    indices = indices[:max_length]  # Truncate
    indices += [vocab["<pad>"]] * (max_length - len(indices))  # Pad
    return indices


en_indices = torch.tensor([sentence_to_indices(s, en_vocab) for s in english_sentences])
fr_indices = torch.tensor([sentence_to_indices(s, fr_vocab) for s in french_sentences])


# 2. Model Definition (Simplified)
# A single LSTM reads the source and predicts one target token per source position.
# This ties each output position to an input position, so it is not a true
# encoder-decoder; it only works for toy data like this.
class Seq2Seq(nn.Module):
    def __init__(self, en_vocab_size, fr_vocab_size, embedding_dim, hidden_dim):
        super().__init__()
        self.embedding = nn.Embedding(en_vocab_size, embedding_dim)
        self.lstm = nn.LSTM(embedding_dim, hidden_dim, batch_first=True)
        self.linear = nn.Linear(hidden_dim, fr_vocab_size)

    def forward(self, input_seq):
        embedded = self.embedding(input_seq)  # (batch, seq_len, embedding_dim)
        lstm_out, _ = self.lstm(embedded)     # (batch, seq_len, hidden_dim)
        return self.linear(lstm_out)          # (batch, seq_len, fr_vocab_size) logits


embedding_dim = 4
hidden_dim = 8
model = Seq2Seq(len(en_vocab), len(fr_vocab), embedding_dim, hidden_dim)

# 3. Loss Function and Optimizer
criterion = nn.CrossEntropyLoss()  # expects raw logits and applies softmax internally
optimizer = optim.Adam(model.parameters(), lr=0.01)

# 4. Model Training
epochs = 200
model.train()
for epoch in range(epochs):
    optimizer.zero_grad()
    output = model(en_indices)
    # Flatten to (batch * seq_len, vocab) logits and (batch * seq_len,) targets
    loss = criterion(output.reshape(-1, len(fr_vocab)), fr_indices.reshape(-1))
    loss.backward()
    optimizer.step()
    if (epoch + 1) % 50 == 0:
        print(f"Epoch {epoch + 1}, Loss: {loss.item():.4f}")


# 5. Prediction (Inference)
def predict_translation(sentence, en_vocab, fr_vocab, model):
    model.eval()  # Set the model to evaluation mode
    reverse_fr_vocab = {index: word for word, index in fr_vocab.items()}
    with torch.no_grad():
        input_data = torch.tensor([sentence_to_indices(sentence, en_vocab)])
        output = model(input_data)
        predicted_indices = torch.argmax(output, dim=2)[0].tolist()
    words = [reverse_fr_vocab[i] for i in predicted_indices]
    return " ".join(w for w in words if w != "<pad>")  # drop padding tokens


print(predict_translation("hello", en_vocab, fr_vocab, model))
```

These simplified examples provide a basic understanding of how to build and train AI translation models using TensorFlow and PyTorch. Real-world applications would involve more sophisticated architectures, larger datasets, and more advanced techniques like attention mechanisms and transformer models. These examples are designed to demonstrate the fundamental principles.

The key takeaways from these examples are:

- Data Preparation: Converting text data into numerical representations (indices) that can be processed by the models.

- Model Definition: Defining the model architecture using layers like embeddings, LSTM (or other recurrent/transformer layers), and dense layers.

- Training Loop: Iterating through the data, calculating the loss, and updating the model’s parameters using an optimizer.

- Prediction: Using the trained model to translate new input sentences.
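The data-preparation step can be sketched in plain Python. This is a minimal sketch, assuming whitespace tokenization and the hypothetical helper names `build_vocab` and `encode`; the special tokens `<pad>`, `<sos>`, `<eos>` and `<unk>` are a common convention rather than something the examples above require.

```python
from collections import Counter

# Hypothetical special tokens; index 0 is reserved for padding.
SPECIAL_TOKENS = ["<pad>", "<sos>", "<eos>", "<unk>"]


def build_vocab(sentences, min_freq=1):
    """Map each word seen at least min_freq times to an integer index."""
    counts = Counter(word for s in sentences for word in s.lower().split())
    vocab = {tok: i for i, tok in enumerate(SPECIAL_TOKENS)}
    for word, freq in sorted(counts.items()):
        if freq >= min_freq:
            vocab[word] = len(vocab)
    return vocab


def encode(sentence, vocab, max_len):
    """Convert a sentence to a fixed-length list of indices."""
    unk = vocab["<unk>"]
    ids = [vocab["<sos>"]]
    ids += [vocab.get(w, unk) for w in sentence.lower().split()]  # unknown words -> <unk>
    ids.append(vocab["<eos>"])
    ids = ids[:max_len]  # truncate long sentences (this may drop <eos>)
    ids += [vocab["<pad>"]] * (max_len - len(ids))  # pad short ones
    return ids


en_sentences = ["hello world", "good morning"]
vocab = build_vocab(en_sentences)
print(encode("hello there", vocab, max_len=6))  # -> [1, 5, 3, 2, 0, 0]
```

Padding every sentence to the same length is what lets a batch of sentences be stacked into a single tensor for the models above.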

Advanced Techniques in AI Language Translation

Advancements in AI language translation continuously push the boundaries of accuracy, fluency, and adaptability. These techniques leverage sophisticated methodologies to address the inherent complexities of natural language, including nuanced grammar, idiomatic expressions, and cultural context. This section explores several key advanced techniques that contribute significantly to the performance and capabilities of modern AI translation systems.

Transfer Learning and Fine-tuning

Transfer learning is a crucial technique that reuses a model pre-trained on one task to speed up training and improve performance on a new task. This approach is particularly valuable in AI language translation.

The process involves:

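- Pre-training: A large, general-purpose language model is trained on a massive corpus of text data. This pre-training phase allows the model to learn fundamental language patterns, grammar rules, and semantic relationships. Examples include Transformer encoders such as BERT and RoBERTa and Transformer encoder-decoder models such as T5 and mBART; encoder-decoder models can generate text directly, which makes them the more natural starting point for translation.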

- Fine-tuning: Once pre-trained, the model is fine-tuned on a smaller, task-specific dataset, such as a parallel corpus of translated sentences. This process adapts the pre-trained model to the nuances of the specific language pair or domain.
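The fine-tuning phase can be illustrated with a toy update rule. This minimal sketch assumes parameters stored in a plain dictionary and gradients supplied by the caller (the names `fine_tune_step` and `frozen`, and all values, are hypothetical). It shows one common way of fine-tuning: some pre-trained parameters are frozen and keep their values, while the rest take a small gradient-descent step on the task-specific data.

```python
def fine_tune_step(params, grads, frozen, lr=1e-3):
    """Return updated parameters after one SGD step.

    params: dict name -> list of floats (pre-trained values)
    grads:  dict name -> list of floats (gradients from the task-specific data)
    frozen: set of parameter names that keep their pre-trained values
    lr:     a small learning rate so fine-tuning does not overwrite pre-training
    """
    updated = {}
    for name, values in params.items():
        if name in frozen:
            updated[name] = list(values)  # copied unchanged
        else:
            updated[name] = [v - lr * g for v, g in zip(values, grads[name])]
    return updated


pretrained = {"embedding": [0.5, -0.2], "output_layer": [0.1, 0.3]}
grads = {"embedding": [1.0, 1.0], "output_layer": [2.0, -1.0]}
new_params = fine_tune_step(pretrained, grads, frozen={"embedding"}, lr=0.1)
print(new_params)
```

In a real framework the same idea is expressed by excluding the frozen layers from the optimizer; the original pre-trained values are left untouched so they can be reused for other domains.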

This strategy offers several benefits:

- Reduced Training Time: Fine-tuning a pre-trained model is significantly faster than training a model from scratch.

- Improved Performance: Transfer learning often leads to higher translation accuracy and fluency, especially when dealing with limited data.

- Data Efficiency: Pre-trained models can achieve good results with smaller datasets, which is particularly useful for low-resource languages.

For instance, consider translating English to French. A multilingual pre-trained model such as mBART, which has seen large amounts of English and French text, can be fine-tuned on an English-French parallel corpus of medical texts. Fine-tuning adapts the model to the specialized vocabulary and sentence structures of medical writing, which typically yields more accurate and relevant translations in that domain.

Approaches for Low-Resource Languages

Low-resource languages, those with limited available data for training translation models, present a significant challenge. Several strategies are employed to overcome these limitations.

Techniques include:

- Data Augmentation: This involves creating synthetic training data to augment the existing limited dataset. Techniques include back-translation (using an existing model to translate monolingual target-language text into the source language, which yields synthetic parallel pairs), paraphrasing, and adding noise to the data.

- Multilingual Models: Training a single model to translate between multiple languages simultaneously. This approach allows the model to leverage knowledge from high-resource languages to improve the translation quality of low-resource languages.

- Cross-lingual Transfer Learning: Leveraging pre-trained models trained on high-resource languages and transferring the learned knowledge to low-resource languages. This is similar to transfer learning but focuses on cross-lingual applications.

- Semi-Supervised Learning: Utilizing both labeled (parallel sentences) and unlabeled (monolingual) data. This technique allows the model to learn from a broader range of data, even without direct translation pairs.

- Zero-Shot Translation: Training models that can translate between language pairs not seen during training. This is achieved by leveraging the shared representations learned from other language pairs.
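One widely used way to train a single multilingual model, and the mechanism behind many zero-shot systems (Google's multilingual NMT system popularized it), is to prepend a token naming the desired target language to every source sentence. The sketch below shows only this data-side preprocessing; the `<2xx>` tag format follows that convention, while `tag_example` and the sentence pairs are illustrative assumptions. The model itself is not shown.

```python
def tag_example(source, target_lang):
    """Prepend a target-language token such as <2fr> to the source text."""
    return f"<2{target_lang}> {source}"


# (source sentence, target sentence, target language) training triples.
# Illustrative pairs only: English<->French and English<->Swahili are seen in training.
training_data = [
    ("hello", "bonjour", "fr"),
    ("bonjour", "hello", "en"),
    ("hello", "jambo", "sw"),
    ("jambo", "hello", "en"),
]

tagged = [(tag_example(src, lang), tgt) for src, tgt, lang in training_data]
for src, tgt in tagged:
    print(src, "->", tgt)

# Zero-shot request: French -> Swahili never appears as a training pair,
# but the same tag tells the shared model which language to produce.
zero_shot_input = tag_example("bonjour", "sw")
print(zero_shot_input)
```

Because every language pair shares one model and one vocabulary, the tag is the only signal that selects the output language, which is what makes requesting an unseen pair possible at all.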

Consider translating Swahili (a low-resource language) into English. For back-translation, a pre-trained English-to-Swahili model can translate monolingual English text into Swahili, producing synthetic Swahili-English sentence pairs for training. A multilingual model trained on English, French, and Swahili could also transfer what it learns from the plentiful English-French data to improve Swahili-English translation.
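The back-translation step in the Swahili example can be sketched as follows. This is a minimal sketch: `translate_en_to_sw` is a hypothetical stand-in for a real pre-trained English-to-Swahili model (here a tiny lookup table), and the sentences are illustrative.

```python
# Hypothetical stand-in for a pre-trained English->Swahili model.
TOY_EN_SW = {"good morning": "habari za asubuhi", "thank you": "asante"}


def translate_en_to_sw(sentence):
    return TOY_EN_SW.get(sentence, "<unk>")


def back_translate(monolingual_en, translate):
    """Create synthetic (Swahili source, English target) pairs.

    The English side is real text and the Swahili side is machine-generated,
    so the model learns to produce natural English from imperfect input.
    """
    pairs = []
    for en in monolingual_en:
        sw = translate(en)
        if sw != "<unk>":  # skip sentences the toy model cannot handle
            pairs.append((sw, en))
    return pairs


real_pairs = [("asante sana", "thank you very much")]
synthetic = back_translate(["good morning", "thank you", "see you"], translate_en_to_sw)
training_pairs = real_pairs + synthetic
print(training_pairs)
```

Keeping the human-written text on the target side is the key design choice: errors from the back-translation model only affect the input, not the output the model learns to produce.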

Incorporating Context and Domain-Specific Knowledge

Integrating context and domain-specific knowledge is crucial for improving the accuracy and relevance of AI language translation. This involves equipping models with the ability to understand the meaning of words and phrases within their context and to adapt to the specific vocabulary and style of a given domain.

Methods for incorporating context and domain-specific knowledge include:

- Contextual Word Embeddings: Using word embeddings that capture the meaning of words based on their surrounding context. Models such as BERT and ELMo generate different embeddings for the same word depending on the sentence.

- Domain Adaptation: Fine-tuning models on domain-specific data to adapt them to the vocabulary, style, and nuances of a particular field, such as medical, legal, or technical domains.

- External Knowledge Integration: Incorporating external knowledge sources, such as knowledge graphs, ontologies, and dictionaries, to provide additional information about words and concepts.

- Attention Mechanisms: Using attention mechanisms to focus on the most relevant parts of the source sentence when generating the target sentence. This helps the model understand the relationships between words and phrases.

- Reinforcement Learning: Employing reinforcement learning to train translation models. This allows the model to learn from feedback and optimize its translations based on specific evaluation metrics.
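The attention idea in the list above can be made concrete with scaled dot-product attention, the variant used in Transformer models. This pure-Python sketch assumes tiny hand-picked vectors rather than learned ones: it scores each source position against the current decoder query, normalizes the scores with softmax, and returns the weighted sum of the source vectors.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Similarity of the query with each source position, scaled by sqrt(d)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Context vector: weighted sum of the value vectors, one dimension at a time
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, context


# Three source positions (keys/values) and one decoder query; numbers are illustrative.
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]
weights, context = attention(query, keys, values)
print([round(w, 3) for w in weights], [round(c, 2) for c in context])
```

The first source position matches the query best, so it receives the largest weight and dominates the context vector the decoder uses for its next word.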

For example, when translating a medical report from English to Spanish, a domain-adapted model fine-tuned on medical literature would be better equipped to handle medical terminology and specialized sentence structures. Furthermore, integrating a medical knowledge graph could provide the model with definitions and relationships between medical concepts, leading to more accurate and informative translations.
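One simple way to inject domain knowledge such as a medical glossary is to protect glossary terms with placeholders before translation and then restore the approved target-language terms afterwards. This is a sketch under assumptions: `translate` is a hypothetical stand-in for a real MT system that leaves placeholders such as `__TERM0__` untouched, and the glossary entries are illustrative.

```python
import re

# Illustrative English->Spanish medical glossary.
GLOSSARY = {"myocardial infarction": "infarto de miocardio", "hypertension": "hipertensión"}


def protect_terms(text, glossary):
    """Replace glossary terms with numbered placeholders, longest terms first."""
    mapping = {}
    for i, term in enumerate(sorted(glossary, key=len, reverse=True)):
        placeholder = f"__TERM{i}__"
        pattern = re.compile(re.escape(term), re.IGNORECASE)
        if pattern.search(text):
            text = pattern.sub(placeholder, text)
            mapping[placeholder] = glossary[term]
    return text, mapping


def restore_terms(text, mapping):
    """Swap each placeholder for its approved target-language term."""
    for placeholder, target_term in mapping.items():
        text = text.replace(placeholder, target_term)
    return text


def translate(text):
    # Hypothetical MT stand-in: handles one phrase and keeps placeholders intact.
    return text.replace("The patient has", "El paciente tiene")


source = "The patient has hypertension."
protected, mapping = protect_terms(source, GLOSSARY)
result = restore_terms(translate(protected), mapping)
print(result)  # El paciente tiene hipertensión.
```

Processing longer terms first prevents a short entry from breaking up a longer multi-word term that contains it.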

Real-World Applications of AI Language Translation

AI language translation has revolutionized how we interact with information and each other across linguistic barriers. From instantly translating messages to providing accessible information for global audiences, its impact is felt across numerous industries and daily activities. This section will delve into specific applications, demonstrating the breadth and depth of AI translation’s influence.

Machine Translation of Websites

Websites are increasingly accessible to global audiences through AI-powered translation. This capability allows businesses, organizations, and individuals to reach a wider audience, fostering greater understanding and communication.

- Website Localization: AI translation tools automatically translate website content, including text, images, and videos, into multiple languages. This allows businesses to tailor their websites to specific regions and cultures. For example, an e-commerce company can automatically translate product descriptions, customer reviews, and website navigation to cater to customers in various countries.

- Dynamic Content Translation: AI can translate dynamic content, such as news articles, blog posts, and social media feeds, in real-time. This ensures that the latest information is accessible to a global audience as soon as it’s published. News websites leverage this capability to provide up-to-the-minute translations of articles from various sources.

- Multilingual SEO: AI-powered translation helps optimize websites for search engines in multiple languages. By translating website content and metadata, businesses can improve their visibility in search results for language-specific queries. This includes translating keywords, page titles, and meta descriptions.
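For multilingual SEO, translated pages are typically linked to each other with `hreflang` annotations so that search engines can serve the right language version to each user. A minimal sketch, assuming a hypothetical site layout of `https://example.com/<lang>/<path>` and a hypothetical helper name `hreflang_links`:

```python
from html import escape


def hreflang_links(base_url, path, languages, default_lang):
    """Build <link rel="alternate"> tags for every translated version of a page."""
    tags = []
    for lang in languages:
        url = f"{base_url}/{lang}/{path}"
        tags.append(f'<link rel="alternate" hreflang="{lang}" href="{escape(url)}" />')
    # x-default tells search engines which version to use when no language matches
    default_url = f"{base_url}/{default_lang}/{path}"
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />')
    return "\n".join(tags)


print(hreflang_links("https://example.com", "products/lamp", ["en", "fr", "es"], "en"))
```

The same set of tags goes in the `<head>` of every language version, so each page points to all of its translations, including itself.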

Instant Messaging

AI language translation has broken down communication barriers in instant messaging platforms, enabling real-time conversations between people who speak different languages. Several popular messaging apps now build this feature in.

- Real-Time Chat Translation: Users can translate messages as they chat with others who speak different languages, so the conversation keeps flowing. Telegram, for example, offers built-in message translation.

- Group Chat Translation: Translation features can be applied to group chats, enabling all participants, regardless of their native language, to understand the conversation. This facilitates collaboration and information sharing in international teams or diverse social groups.

- Voice Translation: Some messaging apps offer voice translation capabilities. Users can speak in their native language, and the app translates their speech into the recipient’s language in real-time, and vice-versa.
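In a group chat, translation is naturally done per recipient: each message is translated into each reader's preferred language and left as-is when the languages already match. A sketch under assumptions: `translate` is a hypothetical stand-in for a real translation service, the message's language is assumed to be known rather than detected, and the names and messages are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Message:
    sender: str
    text: str
    lang: str  # language the sender wrote in (assumed known here)


def translate(text, source_lang, target_lang):
    # Hypothetical stand-in for a call to a translation model or API.
    toy = {("en", "es", "See you tomorrow"): "Hasta mañana"}
    return toy.get((source_lang, target_lang, text), f"[{target_lang}] {text}")


def deliver(message, members):
    """Return what each member sees, translating only when languages differ."""
    cache = {}  # translate once per target language, not once per member
    inbox = {}
    for name, preferred_lang in members.items():
        if preferred_lang == message.lang:
            inbox[name] = message.text
        else:
            if preferred_lang not in cache:
                cache[preferred_lang] = translate(message.text, message.lang, preferred_lang)
            inbox[name] = cache[preferred_lang]
    return inbox


members = {"ana": "es", "bob": "en", "chen": "zh"}
msg = Message(sender="bob", text="See you tomorrow", lang="en")
print(deliver(msg, members))
```

Caching by target language keeps the number of translation calls proportional to the number of languages in the group rather than the number of members.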

Document Translation

Document translation is a vital application of AI translation, facilitating the exchange of information across different languages in various professional and academic settings.

- Business Documents: AI translation tools are used to translate contracts, reports, presentations, and other business documents. This streamlines international business operations, enabling companies to communicate effectively with global partners and clients.

- Academic Papers: Researchers and academics use AI translation to translate scientific papers, research reports, and publications, allowing them to share their work and access information from a global research community.

- Legal Documents: AI translation aids in the translation of legal documents such as contracts, court filings, and other legal materials, ensuring accurate and timely communication in legal proceedings involving multiple languages.
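Long documents such as contracts or papers usually exceed the input length a translation model accepts, so they are split into segments, translated piece by piece, and reassembled in order. A minimal sketch, assuming a naive regex sentence splitter and a hypothetical character limit `max_chars` (real systems typically count model tokens, not characters):

```python
import re


def split_into_chunks(document, max_chars=200):
    """Group whole sentences into chunks of at most max_chars characters.

    A single sentence longer than max_chars becomes its own chunk.
    """
    sentences = re.split(r"(?<=[.!?])\s+", document.strip())
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


def translate_document(document, translate, max_chars=200):
    # translate is any callable str -> str, e.g. a wrapper around an MT model
    return " ".join(translate(chunk) for chunk in split_into_chunks(document, max_chars))


doc = "This agreement starts today. It lasts one year. Either party may end it early."
print(split_into_chunks(doc, max_chars=50))
```

Splitting on sentence boundaries, rather than at a fixed character count, keeps each sentence intact so the model never sees a clause cut in half.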

AI Translation in Business

AI translation significantly impacts business operations, improving communication, expanding market reach, and enhancing customer service.

- Market Expansion: Businesses can use AI translation to translate marketing materials, websites, and product descriptions, expanding their reach to new international markets. This allows companies to tap into a broader customer base and increase revenue.

- Customer Support: AI-powered chatbots and translation tools enable businesses to provide customer support in multiple languages. This enhances customer satisfaction and improves the efficiency of support teams. For instance, a company can use AI to translate customer inquiries in real-time and provide support in the customer’s preferred language.

- Global Collaboration: AI translation facilitates effective communication and collaboration within international teams. This allows employees from different countries to work together on projects, share information, and make decisions efficiently.

AI Translation in Education

AI translation is transforming the education sector by improving access to educational resources and promoting global learning.

- Multilingual Learning Materials: AI translation enables the creation of educational materials in multiple languages, making them accessible to students worldwide. This includes translating textbooks, online courses, and other educational resources.

- Language Learning Tools: AI-powered language learning apps use translation to help students understand new words and phrases, practice their pronunciation, and improve their overall language skills.

- International Collaboration: AI translation supports collaboration between students and educators from different countries. Students can work together on projects, share ideas, and learn from each other’s experiences.

AI Translation in Healthcare

The healthcare industry leverages AI translation to improve patient care, enhance communication, and facilitate research.

- Patient Communication: AI translation tools help healthcare providers communicate with patients who speak different languages. This ensures that patients understand their diagnoses, treatment plans, and instructions, leading to better health outcomes.

- Medical Record Translation: AI translation assists in translating medical records, patient histories, and other healthcare documents, facilitating the exchange of information between healthcare providers across different language barriers.

- Medical Research: AI translation helps researchers access and analyze medical research from around the world. This accelerates the discovery of new treatments and improves healthcare practices globally.

Benefits and Limitations of AI Translation

While AI translation offers numerous benefits, it also has limitations that must be considered.

- Benefits:

  - Speed and Efficiency: AI translation can translate large volumes of text quickly and efficiently, saving time and resources compared to human translation.

  - Cost-Effectiveness: AI translation is generally more affordable than human translation, making it accessible to a wider range of users.

  - Accessibility: AI translation makes information available to a global audience, breaking down language barriers and promoting understanding.

- Limitations:

  - Accuracy: AI translation can sometimes produce inaccurate or awkward translations, particularly for complex or nuanced text.

  - Cultural Sensitivity: AI translation may not always capture the cultural context and subtleties of the original text, leading to misunderstandings.

  - Ethical Considerations: Concerns exist about the potential for AI translation to be used to spread misinformation or bias. It’s crucial to address ethical considerations and ensure responsible use.

Ethical Considerations and Challenges

AI language translation, while offering remarkable advancements, raises significant ethical questions that must be addressed to ensure responsible development and deployment. These challenges include bias, privacy, and the potential for misuse, each of which can affect individuals and society. Mitigating these harms and promoting fairness requires a proactive approach.

Bias in Translation Outcomes

Bias in AI language translation can manifest in various forms, leading to unfair or discriminatory outcomes. This bias can stem from the data used to train the models, reflecting existing societal prejudices, or from the model’s inherent design. The consequences of biased translations can be far-reaching, affecting communication, access to information, and the perpetuation of stereotypes.

- Data Bias: Training datasets often reflect societal biases present in the source text. For example, if a dataset contains predominantly male pronouns associated with certain professions, the translation model may incorrectly assign male pronouns to those professions in the target language, even if the original text is gender-neutral. This perpetuates gender stereotypes.

- Algorithmic Bias: Modeling choices can amplify bias beyond what is present in the data. For example, if 70% of training sentences translate a gender-neutral phrase with a male form, a decoder that always picks the most probable output will produce the male form essentially every time, turning a skew into a near-certainty. This can misrepresent the views or experiences of underrepresented communities.

- Example: Consider a scenario where a translation model is used to translate news articles. If the training data predominantly features positive portrayals of one ethnic group and negative portrayals of another, the model may inadvertently translate news articles in a way that reinforces these biases. This could lead to the unfair vilification of one group or the unearned glorification of another.

- Consequences: Biased translations can contribute to the spread of misinformation, the reinforcement of stereotypes, and the erosion of trust in AI systems. They can also lead to discriminatory outcomes in areas such as job applications, legal proceedings, and healthcare.
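The data-bias example above can be checked before training by counting how often each profession co-occurs with gendered pronouns in the training corpus. This is a deliberately simple sketch: the word lists, the helper name `pronoun_counts`, and the sentences are illustrative assumptions, and real audits need proper tokenization and far richer term lists.

```python
import re
from collections import Counter, defaultdict

PROFESSIONS = {"doctor", "nurse", "engineer"}
MALE = {"he", "him", "his"}
FEMALE = {"she", "her", "hers"}


def pronoun_counts(sentences):
    """Count male and female pronouns in sentences that mention each profession."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = re.findall(r"[a-z]+", sentence.lower())
        for profession in PROFESSIONS.intersection(words):
            counts[profession]["male"] += sum(w in MALE for w in words)
            counts[profession]["female"] += sum(w in FEMALE for w in words)
    return counts


corpus = [
    "The doctor said he would call.",
    "The doctor finished his shift.",
    "The nurse said she was busy.",
    "The engineer checked her design.",
]
for profession, c in sorted(pronoun_counts(corpus).items()):
    print(profession, dict(c))
```

A strongly lopsided count for a profession is a warning sign that a model trained on this data may attach that gender to the profession even when the source text is neutral.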

Strategies to Mitigate Bias in AI Translation Models

Addressing bias in AI translation models requires a multi-faceted approach that encompasses data diversity, model fairness, and transparency. These strategies aim to create more equitable and reliable translation systems.

- Data Diversity: Ensuring the training data is diverse and representative of various demographics, perspectives, and linguistic styles is crucial. This involves curating datasets that include a wide range of voices and experiences, actively seeking out and incorporating underrepresented groups, and regularly auditing the data for potential biases.

- Model Fairness: Implementing techniques to mitigate bias within the model itself is essential. This can include:

  - Adversarial Training: Training an auxiliary adversary to predict a protected attribute (such as gender) from the model’s internal representations, and penalizing the translation model whenever the adversary succeeds.

  - Fairness-Aware Algorithms: Designing algorithms that explicitly optimize fairness metrics alongside accuracy during training.

  - Bias Detection and Mitigation Techniques: Employing techniques to identify and address biases within the model’s architecture and parameters.

- Transparency: Promoting transparency in the development and deployment of AI translation models is vital. This involves:

  - Explainable AI (XAI): Developing methods to make the model’s decision-making process more transparent and understandable.

  - Model Documentation: Providing detailed documentation about the data, algorithms, and limitations of the model.

  - Regular Audits: Conducting regular audits to assess the model’s performance and identify potential biases.

- Human Oversight: Implementing human oversight in the translation process can help to identify and correct biased translations. This involves having human reviewers review and edit the translations produced by the AI model, especially for sensitive content.
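Human oversight is often implemented as a routing rule: translations the model is unsure about, or that touch sensitive topics, go to a reviewer instead of straight to publication. A sketch under assumptions: each translation carries a model confidence score between 0 and 1, and the threshold, keyword list, and example sentences are hypothetical values a team would tune.

```python
from dataclasses import dataclass

SENSITIVE_KEYWORDS = {"diagnosis", "contract", "asylum"}  # hypothetical list
CONFIDENCE_THRESHOLD = 0.85  # hypothetical cut-off


@dataclass
class Translation:
    source: str
    output: str
    confidence: float  # model's own score in [0, 1], assumed available


def needs_human_review(t):
    """Return (flag, reason) for one translation."""
    if t.confidence < CONFIDENCE_THRESHOLD:
        return True, "low confidence"
    words = set(t.source.lower().split())  # naive split; punctuation is not stripped
    if words & SENSITIVE_KEYWORDS:
        return True, "sensitive content"
    return False, "auto-approved"


batch = [
    Translation("Good morning", "Buenos días", 0.97),
    Translation("Sign the contract today", "Firme el contrato hoy", 0.93),
    Translation("The weather is odd", "El clima es raro", 0.60),
]
for t in batch:
    print(t.source, "->", needs_human_review(t))
```

Returning a reason alongside the flag lets reviewers see why an item was routed to them, which also feeds the regular audits described above.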

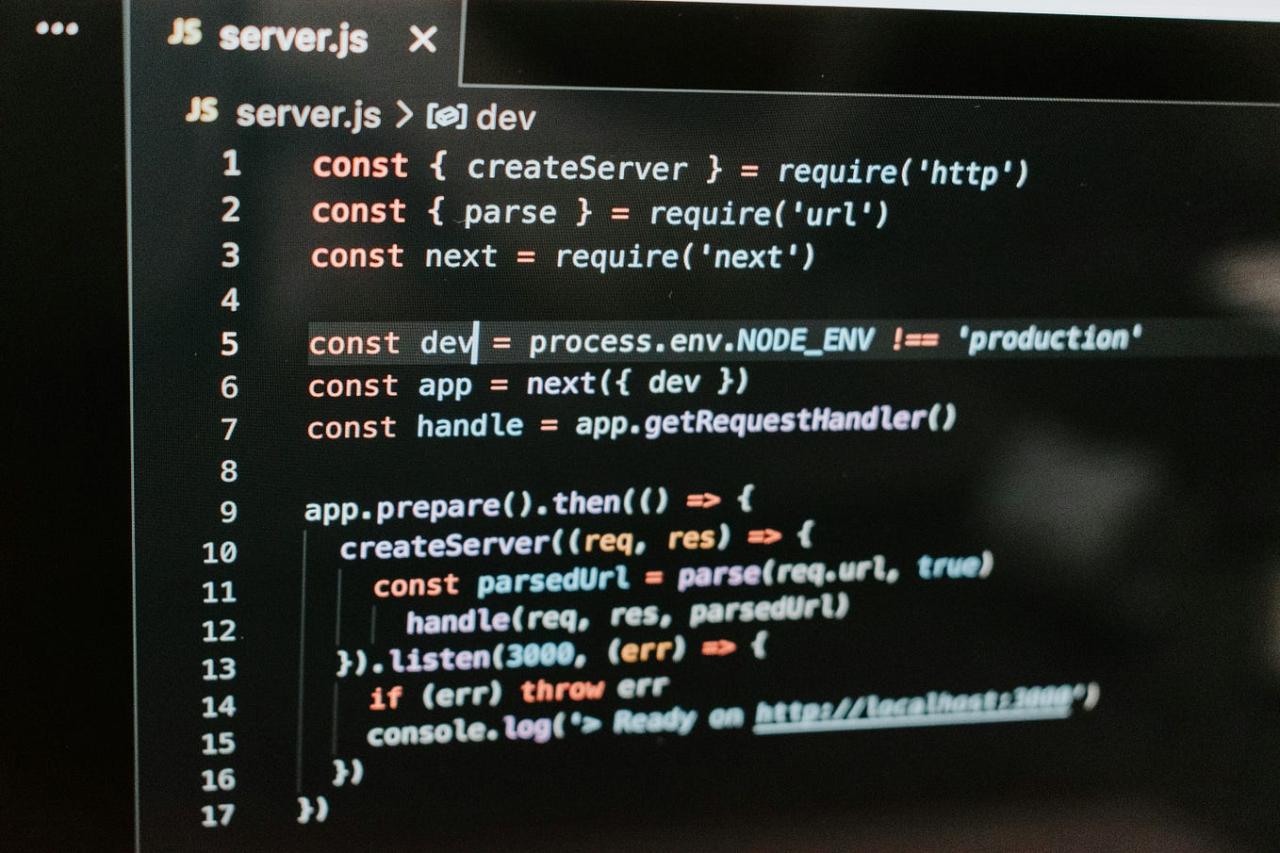

Future Trends in AI Language Translation


The field of AI language translation is dynamic and rapidly evolving. As technology advances, we can anticipate significant shifts in how language translation is performed and utilized. These advancements will impact not only the technical aspects of translation but also its integration with other technologies and its role in global communication.

More Sophisticated Models

The development of more sophisticated models is a key trend in AI language translation. This involves enhancing existing architectures and exploring new approaches to improve accuracy, fluency, and context understanding.

- Transformer Architectures: Transformer models, which have become the dominant architecture in NMT, will continue to be refined. Research focuses on:

  - Increasing model size and complexity to capture more nuanced linguistic patterns.

  - Optimizing training methods to improve efficiency and reduce computational costs.

  - Developing more efficient attention mechanisms to handle long-range dependencies in text.

- Multimodal Translation: The integration of different data modalities, such as text, images, and audio, is gaining traction. This approach aims to:

  - Enable translation based on visual and auditory cues, leading to a richer understanding of context.

  - Improve the accuracy of translation in situations where text alone is insufficient.

  - Facilitate translation of sign languages and other non-textual communication forms.

- Few-Shot and Zero-Shot Translation: These approaches aim to translate between languages with limited or no training data. This is crucial for:

  - Expanding the reach of AI translation to less-resourced languages.

  - Reducing the need for extensive and expensive data collection efforts.

  - Enabling rapid deployment of translation capabilities in new language pairs.
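With large language models, one common way to do few-shot translation is to place a handful of example pairs in the prompt ahead of the sentence to translate; with no examples, the same prompt becomes a zero-shot request. The sketch below only builds such a prompt: the layout and the helper name `build_few_shot_prompt` are assumptions, and sending the prompt to a particular model is out of scope.

```python
def build_few_shot_prompt(examples, source_sentence, source_lang, target_lang):
    """Format example pairs followed by the new sentence to translate."""
    lines = [f"Translate from {source_lang} to {target_lang}."]
    for src, tgt in examples:
        lines.append(f"{source_lang}: {src}")
        lines.append(f"{target_lang}: {tgt}")
    lines.append(f"{source_lang}: {source_sentence}")
    lines.append(f"{target_lang}:")  # the model continues from here
    return "\n".join(lines)


examples = [("Good morning", "Habari za asubuhi"), ("Thank you", "Asante")]
prompt = build_few_shot_prompt(examples, "Thank you very much", "English", "Swahili")
print(prompt)
```

The appeal for less-resourced languages is that a few curated pairs can steer the model without any retraining.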

Integration of AI with Other Technologies

AI language translation is increasingly integrated with other technologies, expanding its applications and capabilities. This integration is poised to transform various aspects of human interaction and global communication.

- Augmented Reality (AR): AR applications will leverage AI translation to provide real-time translation of text and speech in the user’s environment.

  - Imagine a tourist using AR glasses to instantly translate signs, menus, and conversations in a foreign country.

  - Businesses can use AR to provide translated instructions and product information in real time.

- Virtual Reality (VR): VR environments will use AI translation to enable immersive multilingual experiences.

  - VR games and simulations can offer localized content and interactions, allowing users to communicate with others regardless of their native language.

  - VR training programs can provide translated instructions and feedback in real time.

- Edge Computing: Deploying AI translation models on edge devices (e.g., smartphones, smart speakers) will enable:

  - Faster translation speeds and reduced latency, as the processing is done locally.

  - Improved privacy, as sensitive data does not need to be sent to the cloud.

  - Offline translation capabilities, allowing users to translate even without an internet connection.

- Robotics: Robots equipped with AI translation capabilities will be able to understand and respond to commands in multiple languages.

  - This has significant implications for manufacturing, healthcare, and customer service, allowing robots to interact effectively with a diverse range of people.

Evolution of AI Translation

AI translation is poised to significantly impact human communication and global interactions, fostering greater understanding and collaboration.

- Breaking Down Language Barriers: AI translation will continue to improve, making it easier for people to communicate across language barriers. This will facilitate:

  - International collaborations in research, business, and culture.

  - Access to information and knowledge from around the world.

  - Increased opportunities for cultural exchange and understanding.

- Personalized Translation: AI translation will become more personalized, adapting to individual user preferences and communication styles.

  - This will involve tailoring translations to the user’s level of language proficiency, vocabulary, and cultural background.

  - AI can learn from user feedback to improve translation accuracy and fluency over time.

- The Rise of Real-Time Translation: Real-time translation will become more seamless and ubiquitous.

  - Applications like simultaneous interpretation in conferences and meetings will become more accessible.

  - Real-time translation in video calls and social media platforms will foster instant communication.

Visualization of Future Trends

The following text describes a diagram illustrating how AI translation integrates with augmented reality, virtual reality, and other emerging technologies:

A circular diagram with AI Language Translation at the center and arrows radiating outward to the following technologies and applications:

- Augmented Reality (AR): a person wearing AR glasses, with translated text overlaid on the real-world environment.

- Virtual Reality (VR): a person wearing a VR headset, engaged in a virtual environment where conversations and interfaces are translated in real time.

- Edge Computing: a smartphone with a globe icon, indicating global connectivity and real-time translation capabilities.

- Robotics: a robot interacting with people in multiple languages, suggesting its ability to understand and respond to various languages.

- Multimodal Translation: integrated text, images, and audio, representing the capability to process different types of data for translation.

- Few-Shot/Zero-Shot Translation: a world map highlighting various language groups, indicating the potential for translation across many languages, including those with limited data.

Outcome Summary

In conclusion, “how to coding AI language translation” provides a complete overview of the process of creating language translation systems, from the foundational concepts to the advanced techniques. We’ve explored the technical aspects, ethical considerations, and the future trajectory of this dynamic field. As AI translation continues to evolve, it promises to reshape how we communicate and interact on a global scale, fostering understanding and bridging cultural divides.